Improving the User-Interface to Increase Patient Throughput

A. Gupta, J. Masthoff, Philips Research Laboratories, Redhill, UK

P. Zwart, Philips Medical Systems Ned. B.V., Best, The Netherlands

Keywords: Medical, Error, Guidance, Customisation, Learning, Task Analysis, User Interface, Help

Abstract

Philips Medical Systems is a leading supplier of equipment to the international health care industry. We have been exploring the potential for applying advances in user interface technologies (particularly customisation) in future medical X-ray systems. In this paper, we outline the rationale for this by looking at trends in the health care industry and at the types and sources of error that we have observed first-hand in various hospital departments. We distinguish and discuss three ways to reduce errors: improved design of the user-interface, customised default parameter values, and active support for learning during system use, i.e. during examinations. We have based our work firmly on the principles of user-centred design: we have undertaken observations, task modelling, user involvement and prototyping.

Introduction

The research presented in this paper has been conducted in a collaboration between Philips Research Laboratories and Philips Medical Systems, with input from Philips Design. It is a three-year project that has been going on for a year.

As part of a team skilled in user-interface design, the authors of this paper from Philips Research were contracted by Philips Medical Systems to suggest improvements in the examination and control rooms. Our prior experience with the medical domain was rather limited, so we needed to build up our knowledge of the clinical X-ray environment in order to identify real opportunities for improvement. Our customer did not see this as a burden: we were needed for our knowledge of the user-interface field, and particularly of the methods underlying user-centred design, while the customer provided the knowledge of the medical field. We therefore initiated a series of hospital observational studies, interviews, and training courses. We then needed to conceptualise solutions that were technologically feasible, and for this we organised a multi-disciplinary Design Workshop at which we held many brainstorms.

Structure of this paper

The structure of this paper is as follows. We begin by discussing what we mean by human error in the clinical domain. We then describe the observational studies undertaken: the process followed, the methods used, and our findings. Next, we describe the notation used to document the task model, and the user-interface technology that was judged to be applicable, namely customisation. Summaries of all of the above were presented to the participants of a Design Workshop as the raw material on which to base concept generation; the process we used is described. We then discuss a number of ways to reduce human error, namely improved design of the user-interface, default parameter values customised to the situation at hand, and active support for on-line learning. Customisation is the essential element of these and is described in detail. We end with some conclusions.

Definition of human error in the clinical domain

Research on human error in safety-critical systems is generally concerned with the prevention and analysis of major accidents and near misses involving aircraft, trains, and oil and chemical plants. We, however, focus not on such extreme examples but on more subtle, latent types of error, where each occurrence in itself does not have catastrophic effects, but the cumulative impact on relatively less tangible aspects is considerable.

Leplat (as quoted in reference 1) states that "a human error is produced when a human behaviour or its effect on a system exceeds a limit of acceptability". Another definition of human error is "all the occasions in which a planned sequence of mental or physical activities fails to achieve its intended outcome, and when these failures cannot be attributed to the intervention of some chance agency" (ref. 2). Both of these definitions associate human error with not reaching a set goal ("exceeds a limit of acceptability" and "fails to achieve its intended outcome"). So, in order to define human error in the clinical domain, we must first establish what the goals in the clinical domain are.

Some important goals in the healthcare industry are to:

We take a broad view of error and define it as human behaviour resulting in sub-optimal achievement of the goals listed above. Such errors are very likely to occur, but individually they mostly do not have severe or obvious consequences. Nevertheless, they can pose a high risk even though the severity of their individual consequences tends to be low, because risk is defined as the probability or frequency of occurrence multiplied by the severity of the consequence: a high frequency can offset a low severity.
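Written as a formula (the symbols are our own shorthand, not notation from the cited sources), the risk R of a type of error with frequency of occurrence f and severity of consequence S is:

```latex
R = f \cdot S,
\qquad\text{so}\qquad
f_1 = k\,f_2 \;\text{ and }\; S_1 = \frac{S_2}{k} \;\Longrightarrow\; R_1 = R_2 .
```

That is, an error that occurs k times as often as another, but with consequences k times less severe, carries the same risk.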

According to our definition, the following are examples of errors:

It should be noted that it is very difficult to assess whether one of these errors has occurred, because different goals have to be balanced against each other. For instance, taking less time for an examination may lead to less patient comfort and a less accurate diagnosis, and applying a lower dose may lead to lower image quality.

There are many reasons why an error may occur:

Often a combination of these occurs: the clinical user does not use a certain function of the system because the design of the system does not make the purpose of the function clear.

The basis of good user-interface design lies in applying knowledge of users, tasks and the context of use. This is the essence of the user-centred design approach, which we have practised from the outset. The analytical activities undertaken are now described.

Figure 1 – User-centred Design Activities Conducted

Analysis

The range of activities we undertook is illustrated in the process map shown in Fig. 1. We have built up domain knowledge via training sessions at Philips Medical Systems (PMS), observational studies in hospitals, interviews with PMS’ application specialists, and studies of manuals of some current Philips systems (the MD4 general radiology system, the BV300 surgery system, and the BH5000 cardiology system).

Organisation of observational studies: Visits were organised to five hospitals with which PMS has a special relationship. An application specialist of PMS who was very well acquainted with the hospital would accompany us. Application specialists are attached to hospitals and maintain frequent contact with clinical staff at all levels; they also deliver on-site training and support. The whole process was guided by a checklist (see Appendix).

Typically we would start and end a visit with a conversation with the application specialist. We were interested in the type of hospital, the specialisms of the department, the kinds of examinations conducted, the number and types of people working there, the types of systems used, the process used for introducing new systems into the hospital, trends in the hospital, and problems experienced and anticipated.

Next, we would be guided through the department and begin our observations, each observer typically observing about eight examinations. During the examinations, we would stand in the control room, or in the examination room wearing protective tunics, and observe what was going on. We would draw plans of the rooms and record the actions of, and communications between, the clinical staff and the patient, noting the information present on walls and the ambient conditions, for example, lighting and noise. We would look not only at what happened during an examination, but also at what happened between examinations.

We questioned the staff present, namely radiographers, radiologists, cardiologists, and nurses. We were fortunate to be able to talk to very experienced staff and also to trainee radiographers and doctors. We would ask about the nature of their work, their roles, the problems they experienced, the changes they would like, the trends in their work, their training, and what they liked in their job.

Observational studies conducted: We have performed observations in hospitals in the Netherlands, Belgium, and the UK, ranging from film-based hospitals to a digital one. We have seen both diagnostic examinations and interventions, ranging from general radiology to MR, CT, and cardiology. We have also seen some other parts of the workflow, namely reporting and archiving. The observational studies are an ongoing activity: they started as a way to get to know the domain, the users, their tasks, and the environment, and are now becoming more focused on the prototype system we are developing.

A small bowel enema examination:
- The patient is selected from the RIS list.
- The examination table is put in position. In the meantime, the patient is brought in.
- Local anaesthetic is applied (using a spray).
- A tube is prepared and inserted in the patient's nose.
- The patient is put on the table, on his back.
- The C-arm is moved.
- The monitors are moved.
- The lights are dimmed.
- The table is moved up, the C-arm down.
- An image is taken.
- The tube is inserted by a radiographer in the patient's nose and down to the stomach while looking at fluoroscopy images on the ceiling-mounted monitor. The fluoroscopy is operated by the other radiographer at the table operator's control (TOC).
- One radiographer says that the tube is in position. The other radiographer releases the fluoroscopy foot pedal.
- The intercom is used to inform the radiologist that room 7 is ready. In the meantime, the infusion with Barium is prepared.
- The radiologist enters the room and puts on a lead tunic.
- He looks at the paper in the control room and speaks to the patient. [Various announcements are heard on the intercom.]
- The infusion is attached to the nose tube.
- They wait for the contrast to reach the right spot; some fluoroscopy is done.
- In the meantime, the patient is covered with a white blanket.
- Two images are made.
- They wait again. Fluoroscopy is used, images are made. The C-arm is moved, using the TOC. This is repeated a couple of times.
- The patient repeatedly tries to look at the monitor, but has difficulty doing this as the monitor is behind his head.
- The patient is put on his stomach. The tube in his nose is put in the right position, using a kind of scissors or tongs.
- An alarm sounds (a collision?).
- The patient is put on his back again. The C-arm is moved. Fluoroscopy is used, an image is taken.
- The patient is instructed on the position. The table and C-arm are moved. The radiologist looks at the monitors, then walks towards the patient to change something: he is pressing the Barium along. This is repeated.
- A lot of changes in geometry take place, and images are taken. At some point the radiologist says it is okay.
- The table is moved down and the tube is removed from the nose.
- The patient's face is cleaned.
- The patient is helped off the table.
- The monitors are moved to get them out of the way.
- Forms are filled in, with the number of images taken, exposure duration, etc.
- The room is cleaned.

Figure 2 – Sample of an Examination Observation

Samples of information obtained: We have recorded each hospital visit in a report (on average 25 pages, typical sections: users, systems, environment, tasks, information, training, UI issues, trends). Some fragments are included below (see also Figures 2 and 3):

"There is considerable ambient noise in the examination room: an intercom system is in use for broadcasting announcements, motors and jets may sound from the X-ray equipment, the people present in the working area talk amongst themselves, music may be played on a hi-fi system. The noise is intermittent.

There is no daylight; only artificial light (incandescent or fluorescent) is available. The lighting is usually soft and may sometimes be dim; lighting levels may be changed during an examination."

Figure 3: Sample Sketch of an Examination Environment and Picture of an X-Ray Set Up

Main observations: We have made the following observations during hospital visits:

Trends in the clinical domain: The following trends can be observed in the hospitals:

The above observations and trends are not exhaustive, but are illustrative of some of the material on which we based subsequent work.

Figure 4: Fragments of a Generalised Task Model for an Existing System

After each hospital study, we developed task models. This activity is now described.

Task Analysis and Modelling

A Task Model is a drawing that represents the tasks undertaken to achieve a goal. It is a hierarchical drawing, as tasks may be decomposed into sub-tasks. It is read from left to right and captures the process used by the subject in going about his or her job. It is also independent of any technology, user-interface or other, as its level of abstraction is much higher than mouse and keyboard interactions. Task Models are of two types: those representing currently used systems, and those representing the target system to be designed. An example fragment of the former kind is shown in Figure 4. We have based our task modelling work in part on the MUSE method described by Lim & Long (ref. 4). Note that the relation between a Task Model and a system is a subtle one: though a task model is rooted in one or more systems, it is only a description of tasks and is entirely independent of system-level details.
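To make the hierarchical, left-to-right reading of a task model concrete, the following Python sketch represents a task as a node with ordered sub-tasks and lists the leaf tasks in reading order. The class name `Task` and the example task names are hypothetical illustrations, not part of the MUSE method or of our models; ordering types and other annotations are left out here.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Task:
    """A node in a task model: a task decomposed into sub-tasks (hypothetical sketch)."""
    name: str
    subtasks: List["Task"] = field(default_factory=list)

    def leaves(self) -> List[str]:
        """Leaf tasks in the order they appear, read from left to right."""
        if not self.subtasks:
            return [self.name]
        result: List[str] = []
        for sub in self.subtasks:
            result.extend(sub.leaves())
        return result

    def depth(self) -> int:
        """Number of levels in the hierarchy, counting this task as level 1."""
        return 1 + max((sub.depth() for sub in self.subtasks), default=0)


# Hypothetical fragment, loosely inspired by the examination in Figure 2.
examination = Task("Perform examination", [
    Task("Prepare patient", [
        Task("Select patient from list"),
        Task("Position patient on table"),
    ]),
    Task("Acquire images", [
        Task("Locate region of interest"),
        Task("Take image"),
    ]),
    Task("Complete administration"),
])

print(examination.leaves())
print(examination.depth())  # 3
```

Keeping task names free of device or screen vocabulary mirrors the technology independence described above.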

Benefits of task modelling:

Notation:

Task Ordering: Two types of ordering are distinguished in the task model diagrams: Sequential Ordering (indicated by a single horizontal line, tasks ordered from left to right) and Free Ordering (indicated by a double horizontal line).

Suppose a task A consists of subtasks B, C, D, and E. Figure 5 indicates that the subtasks should be done in sequential order: B followed by C followed by D followed by E.

Figure 6 indicates that the ordering of the subtasks is free, i.e., unconstrained: B, C, D, and E can be done in any order.

Figure 5: Sequential Ordering

Figure 6: Free Ordering

Figure 7: Mixed Ordering

Figure 7 shows a mixture of sequential and free ordering: it indicates that B should be done before C and D, which should be done before E. The order in which C and D are done is not important.
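One way to make the ordering semantics precise is to check whether an observed sequence of subtasks is allowed by a diagram. The sketch below assumes an ordering can be written as a sequence of stages, each stage being a group of freely ordered subtasks; this covers Figures 5 to 7 but not every possible partial order. The function name `is_allowed` is our own illustration.

```python
from typing import List, Sequence


def is_allowed(stages: List[List[str]], sequence: Sequence[str]) -> bool:
    """Return True if `sequence` respects a stage-based ordering.

    Stages are done one after another (sequential ordering); the subtasks
    within one stage may be done in any order (free ordering). Each subtask
    must occur exactly once.
    """
    expected = [task for stage in stages for task in stage]
    if sorted(sequence) != sorted(expected):
        return False
    position = 0
    for stage in stages:
        chunk = sequence[position:position + len(stage)]
        if set(chunk) != set(stage):
            return False
        position += len(stage)
    return True


sequential = [["B"], ["C"], ["D"], ["E"]]   # Figure 5
free = [["B", "C", "D", "E"]]               # Figure 6
mixed = [["B"], ["C", "D"], ["E"]]          # Figure 7

print(is_allowed(mixed, ["B", "D", "C", "E"]))       # True: C and D may swap
print(is_allowed(mixed, ["C", "B", "D", "E"]))       # False: B must come first
print(is_allowed(sequential, ["B", "D", "C", "E"]))  # False
print(is_allowed(free, ["E", "C", "B", "D"]))        # True
```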

Repeatable tasks: These are indicated by multiple vertical bars connecting a task to its parent task.

Optional Tasks: These are represented by boxes drawn with dashed lines.

Location of tasks: As we are only interested in two locations (examination or control room), we have used simple colour coding in the top of the box to represent this property. Brown (which prints as a dark shade in grey-scale) represents the examination room; blue (a light shade) represents the control room. An absence of colour implies no restriction on location.

Person: This indicates any division of tasks between radiologists and radiographers. Red (which prints as a dark shade in grey-scale) represents tasks done by radiologists; green (a light shade) those done by radiographers.
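The location and person annotations can be treated as optional attributes of a leaf task, where a missing colour means "no restriction". The Python sketch below (all class, field and task names are hypothetical) shows how such annotations could be queried, for example to list the tasks a radiographer may carry out in the control room.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional


class Location(Enum):
    EXAMINATION_ROOM = "examination room"  # brown in the diagrams
    CONTROL_ROOM = "control room"          # blue in the diagrams


class Person(Enum):
    RADIOLOGIST = "radiologist"    # red in the diagrams
    RADIOGRAPHER = "radiographer"  # green in the diagrams


@dataclass
class LeafTask:
    name: str
    location: Optional[Location] = None  # None: no colour, no location restriction
    person: Optional[Person] = None      # None: no demarcation between roles
    optional: bool = False               # drawn with dashed lines
    repeatable: bool = False             # multiple vertical bars to the parent


def tasks_for(tasks: List[LeafTask], location: Location, person: Person) -> List[str]:
    """Names of tasks that may be done at `location` by `person`."""
    return [t.name for t in tasks
            if t.location in (None, location) and t.person in (None, person)]


# Hypothetical leaf tasks.
tasks = [
    LeafTask("Insert tube", Location.EXAMINATION_ROOM, Person.RADIOGRAPHER),
    LeafTask("Enter examination details", Location.CONTROL_ROOM, Person.RADIOGRAPHER),
    LeafTask("Assess images", None, Person.RADIOLOGIST, repeatable=True),
    LeafTask("Fill in forms", Location.CONTROL_ROOM, None),
]

print(tasks_for(tasks, Location.CONTROL_ROOM, Person.RADIOGRAPHER))
# ['Enter examination details', 'Fill in forms']
```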

UI-technology: Customisation

Reflecting on our observations, it occurred to us that there was a need for more flexible systems, unconstrained by requirements to be used at a fixed location, by certain kinds of users, or with fixed interactions. Additionally, there was potential for a system that could be pro-active in enhancing the proficiency of the user. Customisation technology is the approach we followed. The Microsoft Office Assistant is an example of customised assistance (in this case, context-sensitive help). Professional users can be expected to demand such features in the systems they use once they have been exposed to them in word-processing software. We now discuss customisation in the user interface.

Customisation is the process of adapting a system’s behaviour (by the designers, i.e. the system developers, by the system itself, or by the user) to suit the individual or group using it. In a sense, customisation takes place in each system design. The important variables are to whom the system is customised, what is customised, and how the customisation is done. These are discussed in turn.

To whom is it customised: Customisation is normally aimed at "the customer". In the medical domain, this may be the radiology department of a certain hospital. Each department may have different wishes with respect to the kind of functionality required, may want to use the system for different tasks, and may have its own procedures, its own terminology, etc. Each customer can also have a different environment in which the system will be used, for instance, different lighting and noise conditions, a different cultural background, etc. Usually a system is designed with certain prototypical customers in mind: different versions of the system are made for different categories of customers, and options are provided to configure a system to the wishes of the customer. In the medical domain, for instance, different versions may be made for different countries (or regions), or for low-end and high-end applications, while there may be options for incorporating extended functionality. As every customer tends to be different (and no rough categorisation will be perfect), more customisation may be needed than can be achieved through these means.

Even for one customer (say a certain radiology department), many people may be involved with the system (like radiographers, technicians, administrators, radiologists, surgeons, nurses, etc.). The satisfaction of the customer then depends upon the satisfaction of a group of people, who may have different (sometimes even conflicting) requirements and preferences. Depending on their rôle, they will want to use the same system for different tasks, they may have a different level of education and experience, etc. To a certain extent, it may be possible to use stereotypical roles, and customise the system to these roles. However, these roles and their corresponding characteristics (like level of education) may differ per customer group (for instance, the education level of radiographers and the tasks they are permitted to perform differ according to national regulations).

Differences may also exist between individuals having the same role. There is a lot of literature on individual differences between people that may affect their operation of systems. Straightforward differences are, for instance, the level of experience and the level of domain knowledge. Other differences reported in the literature include psycho-motor skills, learning ability, understanding, expectations, motives, cognitive strategies, cognitive abilities, preferences, etc. These individual differences may affect the best way to present information for a certain user on the screen, the amount of information needed, the input device preferred, etc.

Over time, the system will be used to perform different tasks under different conditions. For instance, in the medical domain, different patients are examined, and different types of examinations take place. Customisation can also take place to these different situations over time.

In summary, a system can be customised to the environment in which it is used, to the (group of) users that operate it, to the tasks that these users perform in general and at a particular moment, and to the situation at hand.

What is customised: Many aspects of a system can be customised. For instance, in the Appeal tutoring system (ref. 5), various aspects were adapted at run-time to the individual student. Examples are the navigation path, timing and amount of instruction, form of information (e.g., using formulae or pictures), (amount, tone and timing of) feedback to the student, and the content of exercises. In an adaptive component of a fighter aircraft (ref. 6), the system adapted task allocation on the basis of the pilot's state, system state and world state.

In general, a system gives the user the opportunity to give input, i.e., to make choices, and presents output depending on the choices made. For instance, an X-ray system gives the user the opportunity to set several parameters (like dose, geometry position, shutters), and the system produces X-ray images accordingly. As such, a system can be said to consist of:

All of these aspects can be customised. For instance, the preferred value of a parameter (such as Brightness) can depend on the task (namely the particular examination type), individual preferences of the user, the environment (such as the lighting condition in the room) and the values of other parameters (like Image Polarity). The default offered to the user could reflect this. Similarly, the preferred way of interaction may depend on the availability of hardware (like microphones), the examination type, and the experience and preferences of the individual user. For instance, during an intervention the radiologist has to be sterile, and the use of speech input for non-critical tasks may be preferred. On the other hand, users who have operated a certain function in a certain way for a long time may be reluctant to change.

The preferred navigation structure may depend on the user’s rôle (for instance, a nurse normally does not need advanced functions), the current task, and the experience of the individual user.

How is it customised: There are several ways in which customisation can take place (see also reference 7):

Mostly, a combination of these options is preferable.

In every run-time customisation process four phases can be distinguished (ref. 8): in the first phase a customisation is suggested, in the second phase a number of alternatives are offered for the customisation, in the third phase a choice is made from the alternatives, and in the fourth phase this choice is carried out. Each phase of the customisation can be performed by the system or the user.

If the system performs all phases, the process is called Self-Customisation (e.g., ref. 9). If the user performs all phases, the process is called User-Controlled Customisation (e.g., ref. 10). We are most interested in hybrid situations, in which, depending on the situation, the phases can be performed by either the system or the user. A primary reason for such hybrid situations is that the user may have more information than the system in some areas, especially concerning his or her own preferences, while the system may have more information in other areas, especially concerning its options. The system may, for instance, suggest alternative available methods for increasing Brightness from which the user can make a choice. A second reason for hybrid situations is that the user may feel more at ease knowing that he or she is in control (ref. 5).
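The four-phase view lends itself to a simple classification: record which actor performs each phase and derive whether the process is self-customisation, user-controlled, or hybrid. The sketch below is our illustration of that idea; the names `Actor`, `PHASES` and `classify` are hypothetical.

```python
from enum import Enum
from typing import Dict


class Actor(Enum):
    SYSTEM = "system"
    USER = "user"


# The four phases of a run-time customisation, in order (after ref. 8).
PHASES = ("suggest", "offer alternatives", "choose", "carry out")


def classify(assignment: Dict[str, Actor]) -> str:
    """Name the kind of customisation, given who performs each phase."""
    missing = [phase for phase in PHASES if phase not in assignment]
    if missing:
        raise ValueError(f"no actor assigned to: {missing}")
    actors = {assignment[phase] for phase in PHASES}
    if actors == {Actor.SYSTEM}:
        return "self-customisation"
    if actors == {Actor.USER}:
        return "user-controlled customisation"
    return "hybrid customisation"


# One possible assignment for the Brightness example: the system suggests
# and offers alternatives, the user chooses, the system carries out the choice.
brightness = {
    "suggest": Actor.SYSTEM,
    "offer alternatives": Actor.SYSTEM,
    "choose": Actor.USER,
    "carry out": Actor.SYSTEM,
}
print(classify(brightness))  # hybrid customisation
```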

Constraints: There are several issues to be considered when designing self-customising systems (see amongst others ref. 11).

One potential problem is that it might become more difficult for the user to learn to operate a system, if it changes over time. The acceptability of the system may also decrease, as the user might not understand the system's behaviour. To compensate for this it has been suggested that the user be given an explicit model of the customisations being undertaken by the system. For instance, the user may be notified that the position of items on a list may change according to their most frequent choices. In this way the user can anticipate, or at least rationalise the actions being taken by the system.
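A minimal sketch of the list example, assuming items are reordered purely by how often the user has chosen them and that the explanation string is what the user is shown; the class `AdaptiveList` and its methods are hypothetical, and the item names are the examination types mentioned later in this paper.

```python
from collections import Counter
from typing import List


class AdaptiveList:
    """A list that reorders its items by how often the user has chosen them."""

    # Explicit model of the customisation, to be shown to the user so that
    # the reordering can be anticipated rather than appearing arbitrary.
    explanation = "Items are listed in order of how often you have chosen them."

    def __init__(self, items: List[str]) -> None:
        self._items = list(items)  # the original order breaks ties
        self._counts: Counter = Counter()

    def choose(self, item: str) -> None:
        if item not in self._items:
            raise ValueError(f"unknown item: {item!r}")
        self._counts[item] += 1

    def ordered(self) -> List[str]:
        # sorted() is stable, so equally frequent items keep their original order.
        return sorted(self._items, key=lambda item: -self._counts[item])


exam_types = AdaptiveList(["Barium", "ERCP", "Skillet", "Myelo"])
for choice in ["Myelo", "ERCP", "Myelo"]:
    exam_types.choose(choice)
print(exam_types.explanation)
print(exam_types.ordered())  # ['Myelo', 'ERCP', 'Barium', 'Skillet']
```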

Norcio & Stanley point out that users may feel a lack of control if the system starts to make choices on their behalf (ref. 12). This may be prevented by giving them the chance to override the system's decisions at all times, or by only giving them suggestions. An alternative is to give users the opportunity to switch off any customisation that the system would otherwise carry out without their prior confirmation. In this way they have ultimate control, and knowing this may be sufficient for them to accept customisations. Also, a system should know when, and when not, to consult the user about a certain customisation.

Related to the previous issue is the issue of safety and legal responsibilities. Especially in the medical domain, these issues are very important. The operator should have the final responsibility for the settings chosen, if these settings have an impact on the safety of a patient.
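The control and responsibility measures discussed above can be combined in a simple policy: the user can switch automatic customisation off, and parameters that affect patient safety are never changed without the operator's confirmation. This is a sketch under our own assumptions; `CustomisationPolicy`, `apply_suggestion` and the choice of Dose as the safety-relevant parameter are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Set


@dataclass
class CustomisationPolicy:
    auto_enabled: bool = True  # the user may switch automatic customisation off
    # Parameters whose settings affect patient safety (hypothetical choice).
    safety_relevant: Set[str] = field(default_factory=lambda: {"Dose"})


def apply_suggestion(
    settings: Dict[str, float],
    parameter: str,
    value: float,
    policy: CustomisationPolicy,
    confirm: Callable[[str, float], bool],
) -> bool:
    """Apply a system-suggested value; return True if it was applied.

    Confirmation is required when automatic customisation is off or the
    parameter is safety-relevant, so the operator keeps final responsibility.
    The user can still override any applied value by setting it directly.
    """
    needs_confirmation = not policy.auto_enabled or parameter in policy.safety_relevant
    if needs_confirmation and not confirm(parameter, value):
        return False
    settings[parameter] = value
    return True


def decline(parameter: str, value: float) -> bool:
    """An operator who declines every confirmation prompt."""
    return False


settings = {"Brightness": 15.0, "Dose": 1.0}
policy = CustomisationPolicy()
print(apply_suggestion(settings, "Brightness", 18.0, policy, decline))  # True
print(apply_suggestion(settings, "Dose", 0.8, policy, decline))         # False
print(settings)  # {'Brightness': 18.0, 'Dose': 1.0}
```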

In order to self-customise, a system has to gather and store data about the user and his or her tasks and preferences. There are, of course, privacy issues related to this. For instance, not every radiographer might appreciate it if a supervisor could inspect the way they normally use the system. So, there have to be rules about who can access which data.

Users may also not like to be categorised. Great care has to be taken when using stereotypes.

Concept generation

After the analysis phase (in which we had already identified some options for improvement), we started to generate concepts for new systems. For this, we needed individuals skilled in ergonomics, industrial design, interaction design, and graphic design, and we were able to call on colleagues in Philips Design for this skill set.

First we organised a workshop involving four designers (three with experience in consumer systems like audio systems, one with experience in medical systems), three researchers, an application specialist of PMS, two people from PMS Predevelopment, and a very experienced radiographer. The purpose of the workshop was to come up with radical, long-term ideas, as is typical for a research project. In a project closer to product development, concepts would also be generated, using focus groups etc., but these concepts would (intentionally) have shorter time horizons for realisation.

Next, five concepts were worked out in more detail, into storyboards, and made more realistic. The designers involved (both without previous experience in the medical domain) were brought up to speed by reading the hospital visit reports and studying the draft generalised task model. The task model was used as a basis for this work: we identified which sub-tasks were closely related to a concept and visualised how the concept could impact these tasks. The storyboards were presented to PMS and we used some criteria (like technical feasibility and marketing potential) to decide which concepts to proceed with.

Figure 8 shows some fragments of storyboards.

Figure 8: Fragments of Two Story Boards

Ways to reduce human error

From our hospital observations, it can be concluded that current systems are being used sub-optimally. This is a cause for concern, as trends in the clinical domain indicate that sub-optimal use will continue to increase in line with system functionality and complexity. According to our definition of human error in the clinical domain, sub-optimal use is a main source of errors. In order to ensure optimal use, the clinical users need to know:

The system needs to support the various users in performing their tasks and obtaining the knowledge relevant in a certain situation. We try to achieve this by a combination of:

These are now discussed in turn.

Improved design of the user-interface: Designing a user-interface requires us to answer the following questions: (1) which user tasks to support, (2) which functions to provide in order to support these tasks, (3) what navigational structure and screen layout to use to make these functions available, (4) how to enable the user to operate these functions, and (5) what information to present to the user about the functions.

The first question leads to the construction of a task model of the target application (as discussed in the task modelling section). This task model is based on (improvements of) the generalised task model of existing systems and on the concept generation activities. A fragment of a task model for a target application supporting Viewing and Post-processing of images is presented in Figure 9. The notation is similar to the notation above, except that location and person indications have been omitted, as all the tasks are intended to be performed in the same location and by any user type. We are currently in the process of validating the task model with application specialists and clinical users.

The second and fourth questions lead to the determination of which functions to provide to a user to assist with the leaf tasks of the task model, and which interaction to provide for these functions. To make this decision, we have analysed the way in which these tasks have been implemented in existing systems (such as existing X-ray, surgery and cardiology systems, but also completely different systems like drawing packages). Such an analysis leads to a choice inspired by best practice across many domains, balanced against the need for consistency with existing practice in the medical domain.

Figure 9: Fragment of a Task Model for a Target Application

The third question (about navigation structure and screen layout) can be answered on the basis of the task model, the functions and interactions chosen, and the available space on the interface. The ordering in the task model influences the navigation structure and screen layout of the system as follows. A task that has to be performed before other tasks might be better represented on an earlier screen. Tasks that can be performed in any order should preferably be present on the same screen. In Western systems, tasks on the same screen will normally be ordered from top to bottom and from left to right. For example, in the case of a sequential ordering of tasks A and B, the functions to enable task A should either be on a screen that precedes the screens with the functions of task B, or they should be towards the top (and left). It should be noted that even in the case of free ordering, the layout of the task model drawing may lead, say, the screen-layout designers to draw conclusions: in a free ordering of a number of tasks, there is a high probability that the left-to-right order will be used as the normal (though not required) ordering. Therefore, within a free ordering, we have ordered the tasks in the task model from left to right according to a likely sequence. Notes have been added to the task model to indicate more than one likely sequence, or to state conditions under which a certain sequence is likely to occur.
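The ordering-to-layout guideline can be sketched as a greedy packing: stages of a sequential ordering are placed in order, either on a later screen or further down on the same screen, and a freely ordered group is never split across screens. The screen capacity (a maximum number of functions per screen), the function `layout_screens` and the task names are hypothetical simplifications; a real layout would also weigh the functions and interactions chosen.

```python
from typing import List


def layout_screens(stages: List[List[str]], capacity: int) -> List[List[str]]:
    """Assign task functions to screens, preserving the task-model order.

    `stages` follows the sequential ordering; tasks within one stage are freely
    ordered and are kept together on one screen, in the drawing's left-to-right
    (likely) sequence. Within a screen, list order stands for top-to-bottom and
    left-to-right placement.
    """
    screens: List[List[str]] = [[]]
    for stage in stages:
        if len(stage) > capacity:
            raise ValueError(f"freely ordered group does not fit on one screen: {stage}")
        if len(screens[-1]) + len(stage) > capacity:
            screens.append([])  # the next stage moves to a later screen
        screens[-1].extend(stage)
    return screens


# Hypothetical viewing and post-processing fragment.
stages = [
    ["Select image"],
    ["Adjust brightness", "Adjust contrast", "Zoom"],
    ["Annotate"],
    ["Archive"],
]
for number, screen in enumerate(layout_screens(stages, capacity=4), start=1):
    print(number, screen)
```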

The grouping of subtasks in the task model reflects the fact that certain tasks are conceptually more related to each other than to other tasks. In the user interface this could result in visually grouping the functions related to those tasks (for instance, by proximity).

The fifth question (what information to present about the functions) is addressed below in the section on active support. In principle, all relevant information that the user does not already know has to be presented. The different types of information have been discussed above.

Customised default values: The main input of a user to a system can be seen as the setting of parameter values, such as, for instance, choosing brightness and contrast values, determining the current image by image navigation, etc. Often, a system will provide the user with default values (these may also be the last values used), which the user can modify to his own choice. The closer the default values are to the preference of the user, the less effort is required from the user to change them. Ideally, the user does not have to do anything, as the values offered by the system already reflect his preference. This is very difficult to achieve, as the situation in which the parameters have to be chosen changes continuously, for instance, as the user is viewing different types of images. However, as a step in this direction, we have developed a so-called Customisation Engine, which determines the default values (and ranges between which the parameters can be modified) on the basis of the current situation, and the preferences of the (individual) user.

Figure 10: Schematic Representation of the Customisation Engine

The Customisation Engine uses (see Figure 10):

The situation in which a rule is applicable can consist of a conjunction of several situational conditions. Each situational condition specifies a range for a certain parameter. For instance, the following can be situational conditions: "Examination type in {Barium, ERCP, Skillet, Myelo}", "12 <= Brightness <= 25", "Subtraction = Off", and "Physician = Dr Spock".
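The situational conditions can be sketched as predicates over a situation, here assumed to be a simple dictionary from parameter names to current values. In this minimal Python sketch (an illustration, not the Customisation Engine's actual implementation), a condition's range is either a set of allowed values or an inclusive numeric interval, and a rule's situation holds only if all of its conditions hold. The helper names `one_of`, `between` and `rule_applies` are hypothetical; the four example conditions are the ones from the text.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List

# A situation maps parameter names to their current values.
Situation = Dict[str, Any]


def one_of(*values: Any) -> Callable[[Any], bool]:
    """Condition range given as a set of allowed values."""
    allowed = set(values)
    return lambda v: v in allowed


def between(low: float, high: float) -> Callable[[Any], bool]:
    """Condition range given as an inclusive numeric interval."""
    return lambda v: low <= v <= high


@dataclass
class Condition:
    parameter: str
    in_range: Callable[[Any], bool]

    def holds(self, situation: Situation) -> bool:
        # A condition on a parameter absent from the situation fails.
        return self.parameter in situation and self.in_range(situation[self.parameter])


def rule_applies(conditions: List[Condition], situation: Situation) -> bool:
    # Situational conditions are combined by conjunction: all must hold.
    return all(c.holds(situation) for c in conditions)


# The four example conditions from the text, combined into one rule situation.
EXAMPLE_CONDITIONS = [
    Condition("Examination type", one_of("Barium", "ERCP", "Skillet", "Myelo")),
    Condition("Brightness", between(12, 25)),
    Condition("Subtraction", one_of("Off")),
    Condition("Physician", one_of("Dr Spock")),
]
```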

It is possible that more than one rule is applicable in a given situation. In that case, the intersection of the ranges of suggested or allowable values for the parameter is used. If this intersection is empty, the rule that uses the most situational conditions is applied.
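The conflict-resolution step for several applicable rules can be sketched as follows. The sketch assumes numeric parameters whose suggested or allowable range is an inclusive interval; the `Rule` type, its field names and the example values are hypothetical. Set-valued parameters (such as examination type) would need a set intersection instead.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Rule:
    name: str
    n_conditions: int  # number of situational conditions the rule uses
    low: float         # suggested/allowable range for the parameter (inclusive)
    high: float


def resolve(applicable: List[Rule]) -> Tuple[float, float]:
    """Combine the ranges of all applicable rules for one parameter."""
    if not applicable:
        raise ValueError("no applicable rule")
    low = max(r.low for r in applicable)
    high = min(r.high for r in applicable)
    if low <= high:
        return (low, high)  # non-empty intersection: use it
    # Empty intersection: fall back to the rule with the most conditions.
    most_specific = max(applicable, key=lambda r: r.n_conditions)
    return (most_specific.low, most_specific.high)
```

When two equally specific rules conflict, `max` here simply returns the first one; the text does not say how such ties are broken.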

The history is used to learn the preferences of the user at run-time. For instance, when the user repeatedly increases the Brightness value of images of a certain type, it is concluded that the user may have a preference for a higher value in that situation. The speed at which the system learns is inversely related to the number of history instances it takes into account (the more instances, the lower the speed). If the learning speed is high, customisation takes place rapidly but is prone to misinterpreting the user. If the learning speed is low, customisation is slow but more accurate. The learning speed should be customisable by the user (or the system could learn it itself, based on observation of correct and incorrect customisations in the past).
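One simple way to realise the relation between history length and learning speed is a sliding window over the values the user actually chose in a given situation. The sketch below is an assumption, not the authors' method: the class name `PreferenceLearner`, the use of a plain mean, and keying the history by a situation label are all illustrative choices.

```python
from collections import defaultdict, deque
from statistics import mean
from typing import DefaultDict, Deque


class PreferenceLearner:
    """Learns a default value per situation from the user's recent choices."""

    def __init__(self, window: int) -> None:
        # window = number of history instances taken into account.
        # Small window: fast but error-prone; large window: slow but more reliable.
        self.window = window
        self.history: DefaultDict[str, Deque[float]] = defaultdict(
            lambda: deque(maxlen=window)
        )

    def record(self, situation: str, chosen_value: float) -> None:
        """Store the value the user finally chose in this situation."""
        self.history[situation].append(chosen_value)

    def default_for(self, situation: str, current_default: float) -> float:
        """Suggest a default; keep the current one until the window is full."""
        values = self.history[situation]
        if len(values) < self.window:
            return current_default
        return mean(values)
```

A window of 1 reproduces the fast but error-prone extreme: a single adjustment immediately becomes the new default.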

It should be noted that no deep modelling of the user takes place; decisions are based almost directly on the data stored in the history.

The use of rules described above is not limited to determining the default values and ranges of the parameters. Rules can also be used to customise other types of parameters, for example interaction style parameters (e.g., does the user prefer touch or speech?), implementation parameters (e.g., what is the maximum time between two touches for the system to regard them as part of one action?), and navigation structure parameters (e.g., "if Subtraction is off then Availability of Pixelshift function is false"). Learning the preferences of the user for these parameters through observation is, however, more difficult and requires different mechanisms, because the user's preferences can only be determined as above if the user is presented with a choice.
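Because the same rule mechanism can set non-numeric parameters, a rule can be viewed as a condition plus a parameter assignment. The minimal sketch below encodes the Pixelshift example from the text; the speech-preference rule, the parameter names and the "later rules win" conflict policy are hypothetical.

```python
from typing import Any, Callable, Dict, List, Tuple

Situation = Dict[str, Any]
# A rule: condition on the situation, parameter to set, value to give it.
Rule = Tuple[Callable[[Situation], bool], str, Any]

RULES: List[Rule] = [
    # Navigation-structure parameter (example from the text).
    (lambda s: s.get("Subtraction") == "Off", "Pixelshift available", False),
    # Interaction-style parameter (hypothetical preference).
    (lambda s: s.get("Physician") == "Dr Spock", "Preferred input", "speech"),
]


def derive_settings(situation: Situation, rules: List[Rule] = RULES) -> Dict[str, Any]:
    """Apply every rule whose condition holds; later rules win on conflicts."""
    settings: Dict[str, Any] = {}
    for condition, parameter, value in rules:
        if condition(situation):
            settings[parameter] = value
    return settings
```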

Interaction for customisation: As mentioned above (see subsection "Constraints"), it is very important that the user continues to feel in control, understands the adaptation taking place, and is not hindered in learning the interface by continuous changes. Therefore, the interaction style used for customisation (i.e., the way customisation is presented to the user and the way the user is involved) is crucial.

In the description of the Customisation Engine above, various moments can be seen at which such an interaction might occur:

A problem we have found (during informal evaluations) is that it is difficult for users to realise that changing a rule during a certain examination may have implications for other, similar examinations in future (even though this was clearly stated in the question put to the user). Therefore, the option to change rules when the user is not busy with a specific examination seems preferable. We still have to conduct user evaluations to see which option, or combination of options, is best.

The acceptability of automatic interdependency changes has to be investigated, especially when they involve automatically changing a parameter that the user has already set explicitly. (For instance, the user sets the Brightness value and then decides to change the Polarity, which may have implications for the Brightness value.)

Our hypothesis is that the third option is best, but this has to be evaluated in practice.
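The Brightness/Polarity example of an automatic interdependency change can be made concrete with a small sketch that implements two candidate policies, leaving the question of which one is acceptable to user evaluation. The dependency rule used here (changing polarity mirrors Brightness on a 0-100 scale) is purely hypothetical, as are the class name and the `respect_explicit` flag.

```python
from typing import Any, Callable, Dict, Set

# Adjustment for a dependent parameter: (new value of trigger, old dependent value) -> new value.
Adjust = Callable[[Any, Any], Any]


class Parameters:
    def __init__(self, values: Dict[str, Any],
                 dependencies: Dict[str, Dict[str, Adjust]],
                 respect_explicit: bool = True) -> None:
        self.values = dict(values)
        self.dependencies = dependencies
        self.explicit: Set[str] = set()  # parameters the user has set by hand
        self.respect_explicit = respect_explicit

    def set_by_user(self, name: str, value: Any) -> None:
        self.values[name] = value
        self.explicit.add(name)
        for dependent, adjust in self.dependencies.get(name, {}).items():
            if self.respect_explicit and dependent in self.explicit:
                # Policy A: never override a value the user chose explicitly.
                continue
            # Policy B (respect_explicit=False): always propagate the change.
            self.values[dependent] = adjust(value, self.values[dependent])


# Hypothetical rule: switching polarity mirrors Brightness on a 0-100 scale.
DEPENDENCIES: Dict[str, Dict[str, Adjust]] = {
    "Polarity": {"Brightness": lambda polarity, brightness: 100 - brightness}
}
```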

In addition to these two moments of interaction, there is also the explicit changing of rules by the user. We have explored a way to enter knowledge that may be more familiar to clinical users than the use of rules: the user is presented with prototypical images and can change the appearance of these images, or choose between alternative appearances. Rules are then deduced from the results. This work is still at a preliminary stage.

Active support for the learning process: It is impossible to give a user all the knowledge mentioned above during a short training period. However, more training time is unlikely to be available: users generally have to learn on the job. Therefore, we are working on having the system actively support the learning process. This support has to be highly customised, depending on the hospital, the experience and preferences of clinical users, the examination being conducted, and user roles.

There has been a considerable amount of research into the design of intelligent help (see, e.g., reference 13), focusing on providing users with good ways to ask questions and on volunteering answers when the user is observed to have a problem. In that research, the user-interface is seen as a constant factor. We have taken a different approach and are focusing on changing the user-interface to increase learnability. We see the two approaches as complementary.

Learnability mainly concerns how efficiency and effectiveness develop over time. Preferably, the system should be highly intuitive to use the first time, i.e., have an immediately high effectiveness without much effort on the part of the user, and its efficiency should increase over time, leading to a highly efficient system for experienced users. Moreover, the system should support the rapid progress of users from initial to experienced level.

In the system we consider (and this holds for most systems), only a limited amount of space is available for the presentation of functions and information. Nevertheless, we know that in the X-ray viewing and post-processing domain, users want many functions to be available at the same time, without having to take extra navigational actions (so, in a manner of speaking, "within one button click"). By using a good user-interface design, and making available only those functions that are appropriate in a certain situation (see section above and Customisation section below), it is to a certain extent possible to meet this wish. If we include the use of speech commands to operate functions, it is even possible to have functions available that are not immediately visible. However, presenting all allowable functions on the system, or hiding them behind speech commands, has a rather negative impact on learnability. There is insufficient room to explain what all the functions do and how to operate them, and this problem is particularly acute when the user is presented with a wide choice. So, our concern for novice users conflicts with our desire to provide highly efficient systems for experienced users.

One option may be to provide separate interfaces for novice and experienced users. However, this has only limited advantages: a user who is familiar with the use of certain functions (such as setting Brightness and Contrast) may never have heard of other functions (like Pixelshift) which can be very useful for his current situation (say, performing a Subtraction with motion artefacts). This is something we have actually observed in our hospital visits. The option of two separate interfaces also poses the danger that the gap between novice use and experienced use becomes too large.

What we would like is that, for instance, when the user is performing a Subtraction and has not used Pixelshift before, the system indicates that this function can be used to remove motion artefacts, and how to use it. This means that we will not use two separate interfaces, but one interface that gradually changes to reflect the experience of the user (i.e., the navigation structure and information are customised to the experience of the user).

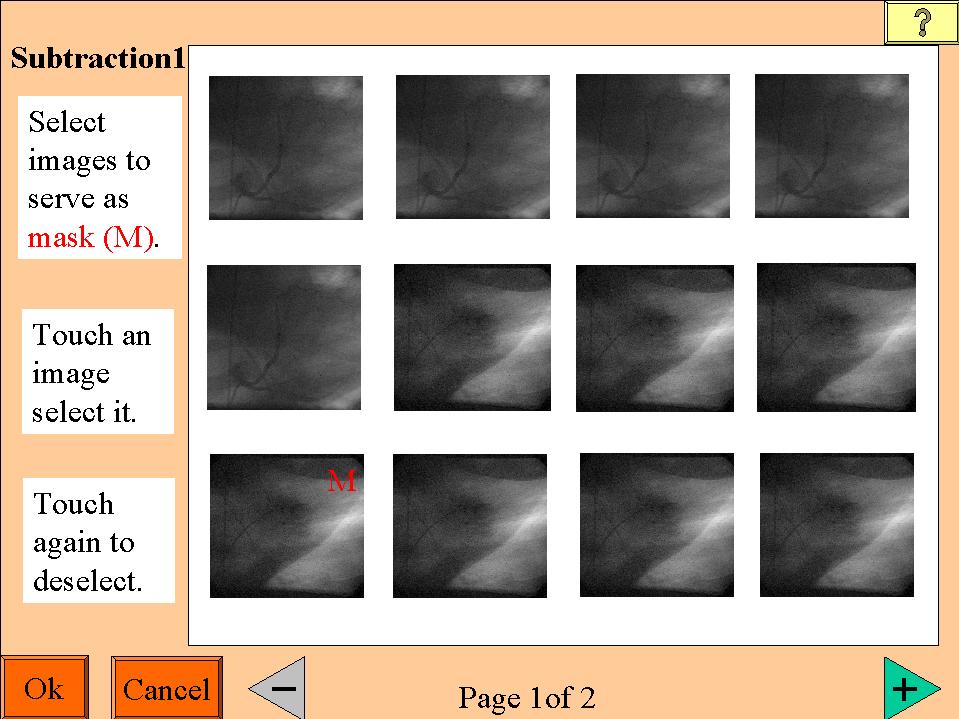

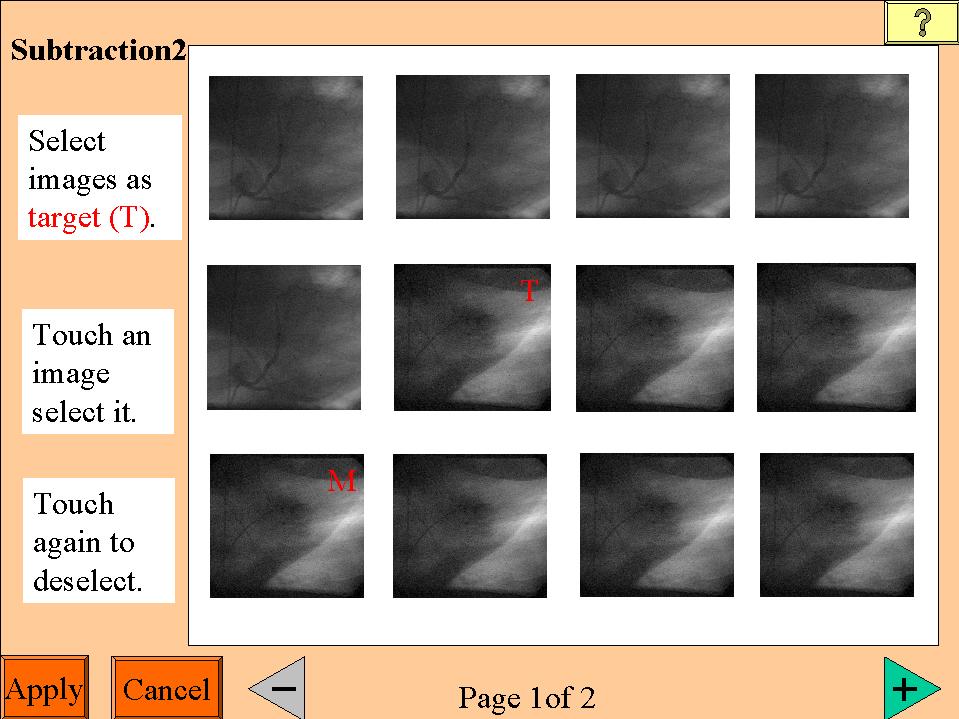

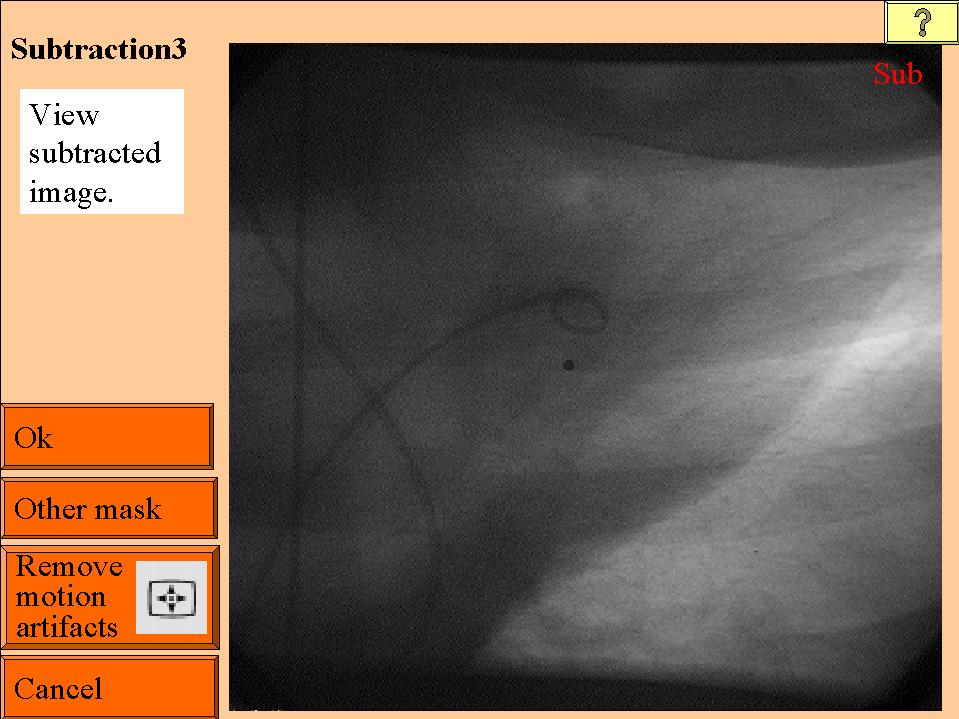

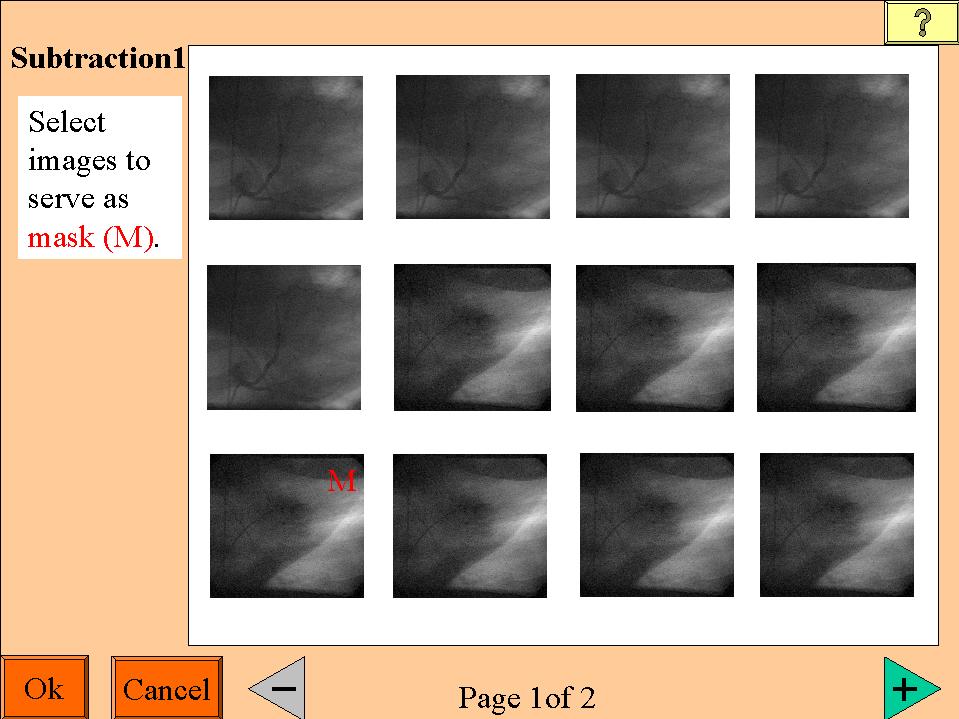

Figure 11: Example of Active Support for the Learning Process


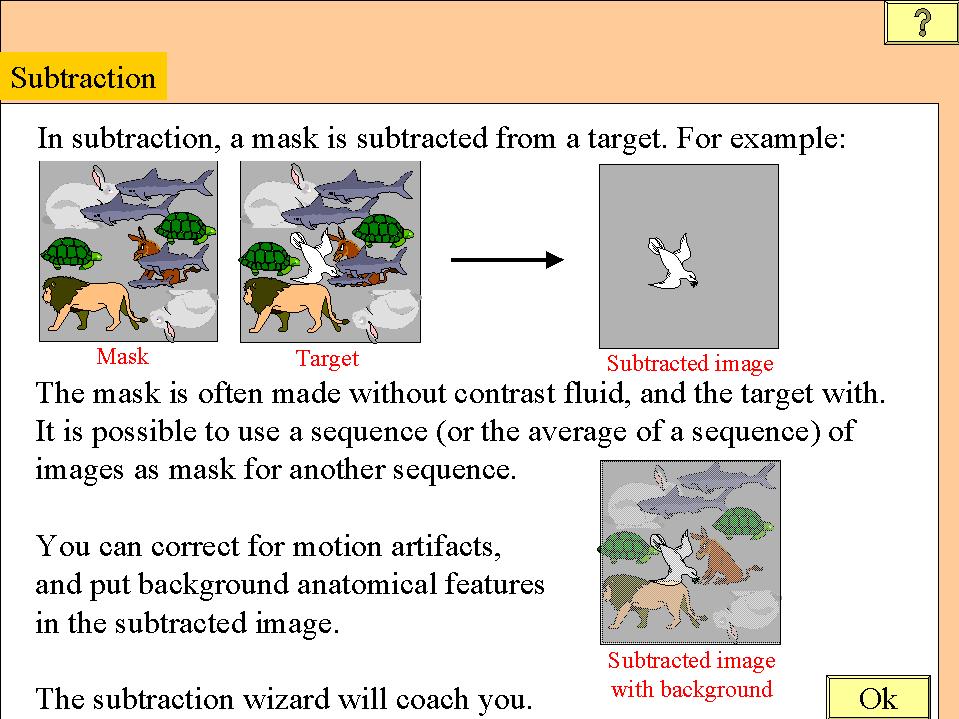

The (simplified) example in Figure 11 illustrates how this could work (note that it uses a touch-sensitive screen). If the user is not familiar with the concept of subtraction, this concept is introduced (image 1). Next, the user is guided through the subtraction task. At each step, information is given on what he should do, how he should do it, and how he can correct mistakes (images 2-4).

Note that the initial sequence presented (images 2-4) is directly based on the task model (see the fragment of the task model in Figure 10). Initially, the user is required to perform the tasks in the task model from left to right, even though the model allows free ordering. When the user becomes more experienced, the free ordering is introduced in the user interface (compare images 2, 3 and 6). Note also that the information presented reflects the types of information mentioned above. What information is presented to the user depends on his experience. If the user has never used a certain function before (and has not seen the information related to that function recently), information regarding the purpose of the function is given, and the user is instructed how to operate it. More detailed information regarding the steps to take is given only when needed. For instance, if the user already has experience with selecting images as part of another task, no further information is given on how to (de)select an image (compare images 2 and 5). The user can still obtain this next level of information by explicitly asking for it with the help function (available from the button marked "?"). We envisage that users will also be able to explicitly select the level of information they like for certain functions. This enables experienced users to continue using more structured interfaces if they prefer.
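The experience-dependent choice of information described above can be sketched as a few simple checks against a record of what the user has done. This is a minimal illustration under stated assumptions: the `UserExperience` fields, the single `experienced` flag that switches from left-to-right guidance to free ordering, and the step names are hypothetical, and a real system would derive them from observed usage.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class UserExperience:
    used_functions: Set[str] = field(default_factory=set)
    recently_seen_info: Set[str] = field(default_factory=set)
    known_steps: Set[str] = field(default_factory=set)  # e.g. steps mastered in other tasks
    experienced: bool = False


def information_for(function: str, steps: List[str], user: UserExperience) -> List[str]:
    """Choose which pieces of information to show for a function."""
    info: List[str] = []
    # Purpose and operation only for functions never used and not recently explained.
    if function not in user.used_functions and function not in user.recently_seen_info:
        info.append(f"purpose of {function}")
        info.append(f"how to operate {function}")
    # Detailed step-level help only for steps the user has not yet mastered,
    # possibly as part of another task (e.g. selecting images).
    info.extend(f"how to {step}" for step in steps if step not in user.known_steps)
    return info


def presentation_order(tasks: List[str], user: UserExperience) -> List[List[str]]:
    """Novices: one task per step, left to right. Experienced: free ordering on one screen."""
    if user.experienced:
        return [list(tasks)]
    return [[task] for task in tasks]
```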

Conclusions

Our main interest is in exploring how new user-interface technologies can be applied to the design of medical systems, so as to reduce sub-optimal system usage in the X-ray examination and control rooms. This has a direct impact on patient throughput, which is expected to remain an important measure in the healthcare industry. We have described how we applied user-centred design methods, basing our work on first-hand observations conducted in hospitals and on Task Analysis and Modelling.

Various problems have been observed during field studies. In this paper, we have suggested a number of ways these could be addressed:

Our work is ongoing; we are presently in the midst of building an integrated research prototype, which will be tested in usability evaluations.

Appendix: Checklist for hospital visits

1. Background

Obtain or develop a global organisation model.

2. Workflow in X-ray examination

3. Examination environment

4. Information

5. X-ray acquisition procedures

6. The way the system can be operated

can still be improved?

7. Patients

8. Introduction of new equipment

9. Training

10. General

11. List systems in the hospital (full names and versions) and the software running on these.

(When talking to an operator, always ask what system is referred to.)

Additional questions related to Image viewing and post-processing

During which parts of the examination are images viewed?

When do they use the monitors in the Examination room, when do they use the monitors in the Control room?

What image processing and viewing functionality is used, why, where, when, and by whom? Ask about:

Are images stored during the viewing process?

Hardcopies versus digital images

Ideas for improvement

Mention/request any other ideas.

Acknowledgements

We would like to thank the application specialists who accompanied us on the hospital visits: Erik Vossen, Theo Loohuis, Jacques Lukassen, and Peter Sutton. Thanks are due to the following designers: Horland Hudson for organising the workshop, Job Rutgers and Jude Rattle for their work on the scenarios, and Sue Coles for her work on interaction styles for customisation. Boris de Ruyter was involved in some discussions on the notation for the task model. Paul Gough gave comments on an earlier version of this paper. This project was supported within PMS by Eric von Reth and Franklin Schuling.

References

Amsterdam, 1993.

Biography

Ashok Gupta, Philips Research Laboratories, Cross Oak Lane, Redhill, RH15HA, UK. Telephone: +44 (1293) 815647, Fax: +44 (1293) 815500. Email: gupta@prl.research.philips.com.

A. Gupta is a Principal Scientist at Philips Research Laboratories, where he has been working since 1988. His primary research interests are in user-centred design for professional applications, user-interface process assessment, requirements specifications, and software process improvement. In addition to the medical domain, he has worked in the consumer electronics and semiconductor domains.

Judith Masthoff, Philips Research Laboratories, Cross Oak Lane, Redhill, RH15HA, UK. Telephone: +44 (1293) 815383, Fax: +44 (1293) 815500.

Email:

J. Masthoff has experience in artificial intelligence, situated cognition, interactive instruction, user-centred design and experimental evaluation.

Both A. Gupta and J. Masthoff are working in the Software Engineering and Applications Group, focusing on research into mobile and personal user-interfaces.

Paul Zwart, Philips Medical Systems Nederland B.V., Veenpluis 4-6, 5680 DA Best. Tel: ++31 (0)40-2763024, Fax: ++31 (0)40-2765657.

E-mail:

P. Zwart has been with Philips Medical Systems since 1985. His interests are in image compression, medical image processing and user interfaces for medical systems.