DESIGNING FORMS TO SUPPORT THE ELICITATION OF INFORMATION ABOUT INCIDENTS INVOLVING HUMAN ERROR

Chris JOHNSON

Department of Computing Science,

University of Glasgow, Glasgow G12 8QQ. Scotland.

johnson@dcs.gla.ac.uk, http://www.dcs.gla.ac.uk/~johnson

ABSTRACT

There has been a rapid growth in the use of incident reporting schemes as a primary means of preventing future accidents. The perceived success of the FAA’s Aviation Safety Reporting System and the UK Confidential Human Factors Incident Reporting System has led to similar systems being set up within many different industries. The value of these systems depends upon the forms that are used to elicit information about potential failures. Poorly constructed forms can lead to confusion about the information that is being requested. Relatively little is known about the impact of form design upon reporting behaviour. This paper, therefore, uses a comparative study of existing incident forms to identify key decisions that must be made during the design of future documents that elicit reports about human "error" and systems "failure".

KEYWORDS

Human error, incident reporting, form design.

1. Introduction

Incident reporting systems offer a number of benefits. For example, incident reports help to find out why accidents DON'T occur (Reason, 1998). The higher frequency of incidents also permits quantitative analysis. It can be argued that many accidents stem from atypical situations. They, therefore, provide relatively little information about the nature of future failures. In contrast, the higher frequency of incidents provides greater insights into the relative proportions of particular classes of human "error" and systems "failure". Incident reports also provide a reminder of hazards (Hale, Wilpert and Freitag, 1997). They provide a means of monitoring potential problems as they recur during the lifetime of an application (Johnson, 1999). They can be used to elicit feedback that keeps staff "in the loop". The data (and lessons) from incident reporting schemes can be shared. Incident reporting systems provide the raw data for comparisons both within and between industries. If common causes of incidents can be observed then, it is argued, common solutions can be found (van der Schaaf, 1996). Incident reporting schemes are cheaper than the costs of an accident. A further argument in favour of incident reporting schemes is that organisations may be required to exploit them by regulatory agencies.

Most of these benefits depend upon the elicitation of information from members of staff who either observe or are involved in particular incidents. However, the design of incident reporting forms has remained a focus of debate amongst the handful of research groups that are active in this area. Meanwhile, hundreds of local, national and international systems are using ad hoc, trial and error techniques to arrive at appropriate forms. This paper, therefore, describes the results of a comparative study of incident reporting forms.

2. Sample Incident Reporting Forms

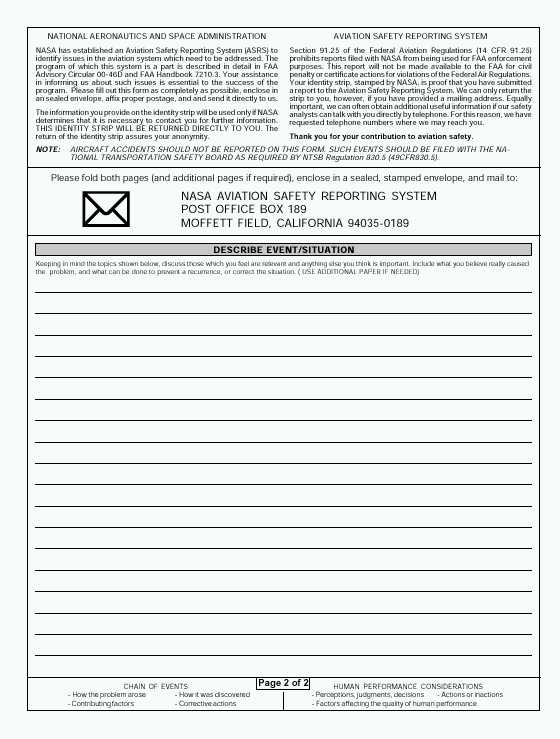

It is important to stress that there are several different approaches to the presentation and dissemination of incident reporting forms. For example, some organisations provide printed forms that are readily at hand for the individuals that work within particular environments. This approach clearly relies upon the active monitoring of staff who must replenish the forms and who must collect completed reports. The form shown in Figure 1 illustrates this approach.

Figure 1: Incident Reporting Form for a UK Neonatal Intensive Care Unit (Busse & Wright, in press).

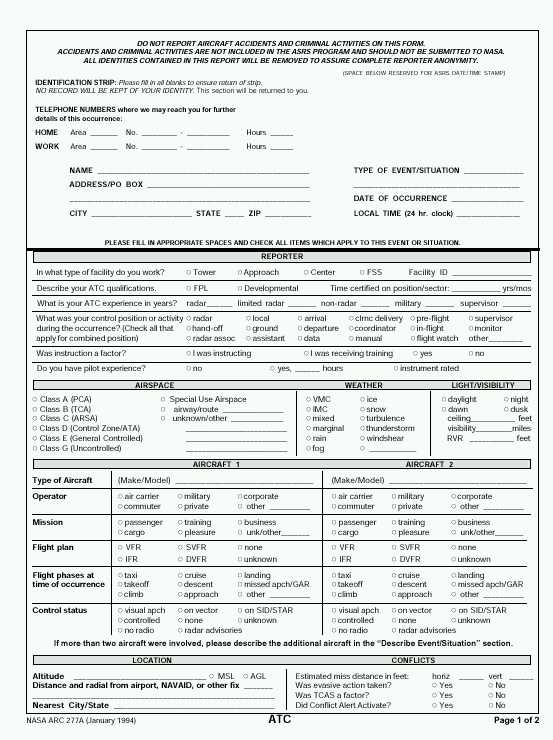

The document in Figure 1 was developed for a Neonatal Intensive Care Unit and is based upon a form that has been used for almost a decade in an adult intensive care environment (Busse and Johnson 1999, Busse and Wright, in press). As can be seen, this form relies upon free-text fields where the user can describe the incident that they have witnessed. This approach works because the people analysing the report are very familiar with the Units that exploit them. In contrast, national and international schemes typically force their respondents to select their responses from lists of more highly constrained alternatives. For example, NASA and the FAA’s ASRS scheme covers many diverse occupations, ranging from maintenance to aircrew activities, in the many different geographical regions of the United States. This has a radical effect on forms such as that shown in Figure 2 which is designed to elicit reports about Air Traffic Control incidents. Pre-defined terms are used to distinguish between the many different control positions and activities that are involved in an international, air traffic control system. Much of this activity information remains implicit in local forms such as that shown in Figure 1.

Figure 2: ASRS Reporting Form for Air Traffic Control Incidents (January 2000).

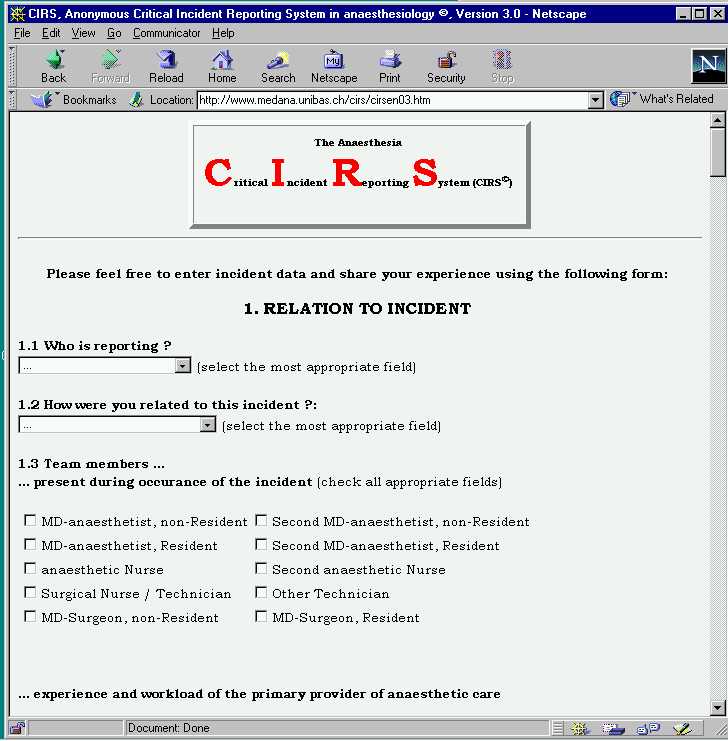

The local reporting form shown in Figure 1 is distributed by placing paper copies within the users’ working environment. In contrast, ASRS forms are also available over the World Wide Web. They can be downloaded and printed using Adobe’s proprietary Portable Document Format (PDF). The geographical and the occupational coverage of the ASRS system again determine this approach. The web is perceived to provide a cost-effective means of disseminating incident reporting forms. ASRS report forms cannot, however, be submitted using Internet based technology. There are clear problems associated with the validation of such electronic submissions. A small but increasing number of reporting systems have taken this additional step towards the use of the Web as a means both of disseminating and submitting incident reporting forms. For instance, Figure 3 illustrates part of the on-line system that has been developed to support incident reporting within Swiss Departments of Anaesthesia (Staender, Kaufman and Scheidegger, 1999).

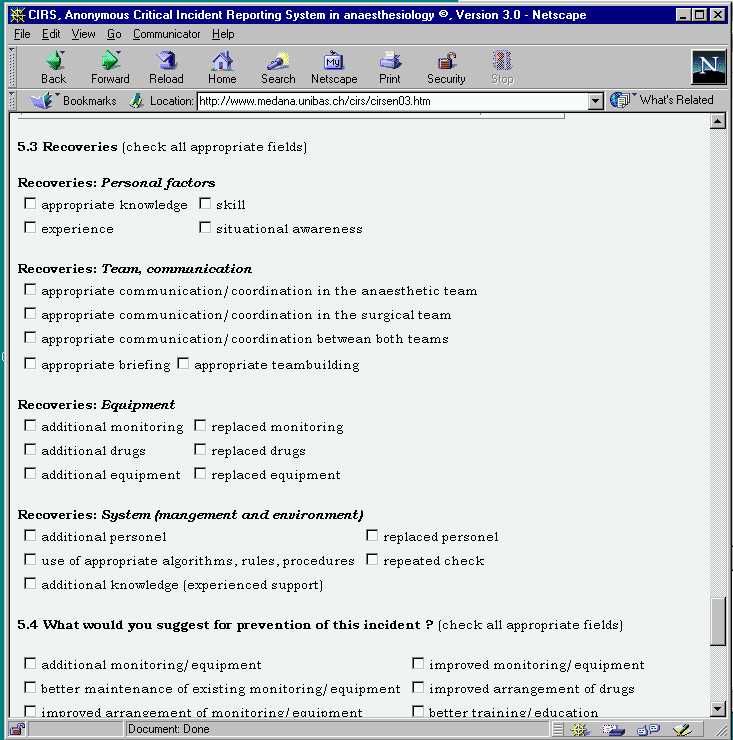

As with the ASRS system and the local scheme, the CIRS reporting form also embodies a number of assumptions about the individuals who are likely to use the form. Perhaps the most obvious is that they must be computer literate and be able to use the diverse range of dialogue styles that are exploited by the system. They must also be able to translate between the incident that they have witnessed and the various strongly typed categories that are supported by the form. For instance, users must select from one of sixteen different types of surgical procedure that are recognised by the system. Perhaps more contentiously they must also characterise human performance along eight Likert scales that are used to assess lack of sleep, amount of work-related stress, amount of non-work related stress, effects of ill or healthy staff, adequate or inadequate knowledge of the situation, appropriate skills and appropriate experience. The introspective ability to independently assess such factors and provide reliable self-reports again illustrates how many incident reporting forms reflect the designers’ assumptions about the knowledge, training and expertise of the target workforce.

Figure 3: The CIRS Reporting System (Staender, Kaufman and Scheidegger, 1999)

3. Providing Information to the Respondents

The previous section has illustrated a number of different approaches to the elicitation of information about human "error" and systems "failure". However, these different approaches all address a number of common problems. The first is how to encourage people to contribute information about the incidents that they observe.

3.1 Assurances of Anonymity or Confidentiality

The confidentiality of any report is an important consideration for many potential respondents. Each of the systems presented in Section 2 illustrates a different approach to this issue. For example, NASA Ames administers the ASRS on behalf of the FAA. They act as an independent agency that guarantees the anonymity of respondents. FAA Advisory Circular 00-46D states that "The FAA will not seek, and NASA will not release or make available to the FAA, any report filed with NASA under the ASRS or any other information that might reveal the identity of any party involved in an occurrence or incident reported under the ASRS". However, it is important to note that this scheme is confidential in the sense that NASA will only guarantee anonymity if the incident has no criminal implications.

Respondents to the ASRS are asked to provide contact information so that NASA can pursue any additional information that might be needed. Conversely, the local scheme illustrated in Figure 1 does not request identification information from respondents. This anonymity is intended to protect staff and encourage their participation. However, it clearly creates problems during any subsequent causal analysis for reports of human error. It can be difficult to identify the circumstances leading to an incident if analysts cannot interview the person making the report. However, this limitation is subject to a number of important caveats that affect the day to day operation of many local reporting schemes. For instance, given the shift system that operates in many industries and the limited number of personnel who are in a position to observe particular failures it is often possible for local analysts to make inferences about the people involved in particular situations. Clearly there is a strong conflict between the desire to prevent future incidents by breaking anonymity to ask supplementary questions and the desire to safeguard the long-term participation of staff within the system.

The move from paper-based schemes to electronic systems raises a host of complex social and technological issues surrounding the anonymity of respondents and the validation of submissions. The Swiss scheme shown in Figure 3 states that "During your posting of a case there will be NO questions that would allow an identification of the reporter, the patient or the institution. Furthermore we will NOT save any technical data on the individual reports: no E-mail address and no IP-number (a number that accompanies each submitted document on the net). So no unauthorised 'visitor' will find any information that would allow an identification of you or your patient or your institution (not even on our local network-computers) by browsing through the cases". This addresses the concern that it is entirely possible for web servers to record the address of the machine making the submission without the respondent’s knowledge. However, there is also a concern that groups might deliberately distort the findings of a system by generating spurious reports. These could, potentially, implicate third parties. It, therefore, seems likely that future electronic systems will follow the ASRS approach of confidential rather than anonymous reporting.
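The CIRS assurance quoted above amounts to a rule about what a server is allowed to persist. A minimal Python sketch of such a rule is given below. The field names (`email`, `ip_address`, `user_agent`) and the function `strip_identifying_metadata` are hypothetical illustrations and are not taken from the CIRS implementation.

```python
# Hypothetical metadata keys that could identify a reporter; not taken from CIRS.
IDENTIFYING_KEYS = frozenset({"email", "ip_address", "user_agent"})


def strip_identifying_metadata(submission: dict) -> dict:
    """Return a copy of the submission without identifying transport metadata."""
    return {k: v for k, v in submission.items() if k not in IDENTIFYING_KEYS}


# Example submission as it might arrive at a web server (invented data).
raw = {
    "description": "Airway pressure alarm sounded during induction.",
    "email": "reporter@example.org",
    "ip_address": "192.0.2.10",
}

# Only the stripped copy would be written to the case database.
stored = strip_identifying_metadata(raw)
```

Filtering of this kind does nothing to address the concern about spurious reports, because it deliberately discards the information that would be needed to validate a submission.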

3.2 Definitions of an Incident?

It is important to provide users with a clear idea of when they should consider making a submission to the system. For example, the local scheme in Figure 1 states that an incident must fulfil the following criteria: "1. It was caused by an error made by a member of staff, or by a failure of equipment. 2. A person who was involved in or who observed the incident can describe it in detail. 3. It occurred while the patient was under our care. 4. It was clearly preventable. Complications that occur despite normal management are not critical incidents. But if in doubt, fill in a form". This pragmatic definition from a long-running and successful scheme is full of interest for researchers working in the area of human error. For instance, incidents that occur in spite of normal management do not fall within the scope of the system. Some might argue that this effectively prevents the system from targeting problems within the existing management system. However, such criticisms neglect the focused nature of this local system, which is specifically intended to "target the doable" rather than capture all possible incidents.
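The four criteria of the local scheme, together with its "if in doubt" escape clause, can be read as a simple decision rule. The following Python sketch is an illustration only: the local form is paper-based, and the class `CandidateIncident` and both function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CandidateIncident:
    """One field per criterion quoted from the local form."""
    staff_error_or_equipment_failure: bool  # criterion 1
    describable_in_detail: bool             # criterion 2
    occurred_under_our_care: bool           # criterion 3
    clearly_preventable: bool               # criterion 4


def meets_local_definition(c: CandidateIncident) -> bool:
    """True only when all four criteria hold."""
    return (
        c.staff_error_or_equipment_failure
        and c.describable_in_detail
        and c.occurred_under_our_care
        and c.clearly_preventable
    )


def should_fill_in_form(c: CandidateIncident, in_doubt: bool = False) -> bool:
    """The form's 'if in doubt, fill in a form' clause overrides the criteria."""
    return in_doubt or meets_local_definition(c)


# A complication that arose despite normal management: outside the definition.
complication = CandidateIncident(False, True, True, False)
```

The `in_doubt` flag makes explicit that the definition is advisory: a respondent who is unsure is still encouraged to report.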

In contrast to the local definition which reflects the working context of the unit in which it was applied, the wider scope of the CIRS approach leads to a much broader definition of the incidents under consideration. "Defining critical incidents unfortunately is not straightforward. Nevertheless we want to invite you to report your critical incidents if they match with this definition: an event under anaesthetic care which had the potential to lead to an undesirable outcome if left to progress. Please also consider any team performance critical incidents, regardless of how minimal they seem". It is worth considering the implications of this definition in the light of previous research in the field of human error. For example, Reason (1998) has argued that many operators spend considerable amounts of time interacting in what might be termed a "sub-optimal" manner. Much of this behaviour could, if left unchecked, result in an undesirable outcome. However, operating practices and procedures help to ensure safe and successful operation. From this it follows that if respondents followed the literal interpretation of the CIRS definition then there could be a very high number of submissions. Some schemes take this broader approach one step further by requiring that operators complete an incident form after every procedure even if they only indicate that there had been no incident. The second interesting area in the CIRS definition is the focus on team working. The number of submissions to a reporting system is likely to fall as the initial enthusiasm declines. One means of countering this is to launch special reporting initiatives, for instance by encouraging users to submit reports on particular issues such as team co-ordination problems. There is, however, the danger that this will lead to spurious attention being placed on relatively unimportant issues if the initiatives are not well considered.

The ASRS forms no longer contain an explicit indication of what should be reported. Paradoxically, the forms contain information about what is NOT regarded as being within the scope of the scheme. "Do not report aircraft accidents and criminal activities on this form". This lack of an explicit definition of an incident reflects the success of the ASRS approach. In particular, it reflects the effectiveness of the feedback that is provided from the FAA and NASA. Operators can infer the sorts of incidents that are covered by the ASRS because they are likely to have read about previous incidents in publications such as the Callback magazine. This is distributed to more than 85,000 pilots, air traffic controllers and others in the aviation industry. Callback contains excerpts from ASRS incident reports as well as summaries of ASRS research studies. This coverage helps to provide examples of previous reporting behaviour. Of course, it might also be argued that apparently low participation rates, for example amongst cabin staff, could be accounted for by their relatively limited exposure to these feedback mechanisms. This raises further complications. In order to validate such hypotheses it is necessary to define an anticipated reporting rate for particular occupational groupings, such as cabin staff. This is almost impossible to do because without a precise definition of what an incident actually is, it is impossible to estimate exposure rates.

3.3 Explanations of Feedback and Analysis

Potential contributors must be convinced that their reports will be acted upon. For example, the local system in Figure 1 includes the promise that: "Information is collected from incident reporting forms (see overleaf) and will be analysed. The results of the analysis and the lessons learnt from the reported incidents will be presented to staff in due course". This informal process is again typical of systems in which the lessons from previous incidents can be fed-back through ad hoc notices, reminders and periodic training sessions. It contrasts sharply with the ASRS approach: "Incident reports are read and analysed by ASRS's corps of aviation safety analysts. The analyst staff is composed entirely of experienced pilots and air-traffic controllers. Their years of experience are uniformly measured in decades, and cover the full spectrum of aviation activity: air carrier, military, and general aviation; Air Traffic Control in Towers, TRACONS, Centres, and Military Facilities. Each report received by the ASRS is read by a minimum of two analysts. Their first mission is to identify any aviation hazards, which are discussed in reports and flag that information for immediate action. When such hazards are identified, an alerting message is issued to the appropriate FAA office or aviation authority. Analysts' second mission is to classify reports and diagnose the causes underlying each reported event. Their observations, and the original de-identified report, are then incorporated into the ASRS's database".

The CIRS web-based system is slightly different from the other two examples. It is not intended to directly support intervention within particular working environments. Instead, the purpose is to record incidents so that other anaesthetists can access them and share experiences. It, therefore, follows that very little information is provided about the actions that will be taken in response to particular reports: "Based on the experiences from the Australian-Incident-Monitoring-Study, we would like to create an international forum where we collect and distribute critical incidents that happened in daily anaesthetic practice. This program not only allows the submission of critical incidents that happened at your place but also serves as a teaching instrument: share your experiences with us and have a look at the experiences of others by browsing through the cases. CIRS© is anonymous". This approach assumes that the participating group already has a high degree of interest in safety issues and, therefore, a motivation to report. It implies a degree of altruism in voluntarily passing on experience without necessarily expecting any direct improvement within the respondents’ particular working environment.

4. Eliciting Information from Respondents

The previous section focussed on the information that must be provided in order to elicit incident reports. In contrast, this section identifies information that forms must elicit from their respondents.

4.1 Detection Factors

A key concern in any incident reporting system is to determine how any adverse event was detected. There are a number of motivations behind this. Firstly, similar incidents might be far more frequent than first thought but they might not have been detected. Secondly, similar incidents might have much more serious consequences if they were not detected and mitigated in the manner described in the report.

As before, there are considerable differences in the approaches adopted by different schemes. CIRS provides an itemised list of clinical detection factors. These include direct clinical observation, laboratory values, airway pressure alarm and so on. From this the respondent can identify the first and second options that gave them the best indication of a potential adverse event. It is surprising that this list focuses exclusively on technical factors. The web-based form enables respondents to indicate how teams help to resolve anomalies. However, it does not consider how the user’s workgroup might help in the detection of an incident.

The local scheme of Figure 1 simply asks for the "Grade of staff discovering the incident". Even though it explicitly asks for factors contributing to and mitigating the incident, it does not explicitly request detection factors. In contrast, ASRS forms reflect several different approaches to the elicitation of detection factors. For instance, the form for reporting maintenance failures includes a section entitled "When was problem detected?" Respondents must choose from routine inspection, in-flight, taxi, while aircraft was in service at the gate, pre-flight or other. There is, in contrast, no comparable section on the form for Air Traffic Control incidents. This in part reflects the point that previous questions on the Air Traffic Control form can be used to identify the control position of the person submitting the form. This information supports at least initial inferences about the phase of flight during which an incident was detected. It does not, however, provide explicit information about what people and systems were used to detect the anomaly. Fortunately, all of the ASRS report forms prompt respondents to consider "How it was discovered?" in a footnote to the free-form event description on the second page of the report. In the ASRS system, analysts must extract information about common detection factors from the free-text descriptions provided by users of the system.

4.2 Causal factors

It seems obvious that any incident reporting form must ask respondents about the causal factors that led to an anomaly. As with detection factors, the ASRS exploits several different techniques to elicit causal factors depending on whether the respondent is reporting an Air Traffic Incident, a Cabin Crew incident etc. For example, only the Cabin Crew forms ask whether a passenger was directly involved in the incident. It is interesting that the form does not distinguish between whether the passenger was a causal factor or suffered some consequence of the incident. In contrast, the maintenance forms ask the respondent to indicate when the problem was detected: during routine inspection; in-flight; taxi; while aircraft was in service at gate; pre-flight or other. In spite of these differences, there are several common features across different categories in the ASRS. For instance, both Maintenance and Air Traffic reporting forms explicitly ask respondents to indicate whether they were receiving or giving instruction at the time of the incident. Overall, it is surprising how few explicit questions are asked about the causal factors behind an incident. The same footnote that directs people to provide detection information also requests details about "how the problem arose" and "contributing factors". This is an interesting distinction because it suggests an implicit categorisation of causes. A primary root cause for "how the problem arose" is being distinguished from other "contributing factors". This distinction is not followed in the local scheme of Figure 1. The respondent is simply asked to identify "what contributed to the incident". The same form asks specifically for forms of equipment failure but does not ask directly about any organisational or managerial causes.

The web-based CIRS has arguably the most elaborate approach to eliciting the causes of an incident. In addition to a free-text description of the incident, it also requests "circumstantial information" that reveals a concern to widen the scope of any causal analysis. For instance, it includes seven Likert scales to elicit information about "Circumstances: team factors, communication". Respondents are asked to indicate whether there was no briefing (1) up to a pertinent and thorough briefing (5). They must also indicate whether there was a major communication/co-ordination breakdown (1) or efficient communication/co-ordination in the surgical team (5). Again, such questions reveal a great deal about the intended respondents and about the people drafting the form. In the former case it reveals that the respondents must be aware of the general problems arising from team communications and co-ordination in order for them to assess its success or failure. In the latter case, such causal questions reveal that the designers are aware of recent literature on the wider causes of human error beyond "individual failure".
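The two team-factor scales described above can be represented as five-point items that are anchored at each end. The Python sketch below is a minimal illustration under that assumption. The class `LikertItem` and the dictionary keys are hypothetical, and only the two items quoted in the text are modelled, not all seven.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LikertItem:
    """One five-point item, anchored by a label at each end of the scale."""
    low_anchor: str   # meaning of a score of 1
    high_anchor: str  # meaning of a score of 5

    def validate(self, score: int) -> int:
        """Return the score unchanged, or raise if it falls outside 1..5."""
        if not 1 <= score <= 5:
            raise ValueError(f"score must be between 1 and 5, got {score}")
        return score


# Anchors taken from the scale descriptions in the text.
briefing = LikertItem("no briefing", "pertinent and thorough briefing")
communication = LikertItem(
    "major communication/co-ordination breakdown",
    "efficient communication/co-ordination in the surgical team",
)

# An invented response to the two items.
response = {
    "briefing": briefing.validate(2),
    "communication": communication.validate(4),
}
```

Validation of this kind guarantees only that a score is well formed. It cannot show that two respondents mean the same thing by a score of 3, which is the self-report problem raised earlier.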

4.3 Consequences

Previous paragraphs have shown that different reporting systems exploit different definitions of what constitutes an incident. These differences have an important knock-on effect in terms of the likely consequences that will be reported to the system. For instance, the distinction between the incident and accident reporting procedures of the FAA will ensure that no fatalities will be reported to the ASRS. Conversely, the broader scope of the CIRS definition ensures that this scheme will capture incidents that do contribute to fatalities. This is explicitly acknowledged in the rating system that CIRS encourages respondents to use when assessing the outcomes of an incident: Transient abnormality - unaware for the patient; Transient abnormality with full recovery; Potential permanent but not disabling damage; Potential permanent disabling damage; Death (Lack, 1990). This contrasts with the local system that simply provides a free text area for the respondent to provide information about "what happened to the patient?" The domain dependent nature of outcome classification is further illustrated by maintenance procedures in the ASRS. Here the respondent is asked to select from: flight delay; flight cancellation; gate return; in-flight shut-down; aircraft damage; rework; improper service; air turn back or other.

The distinction between immediate and long-term outcomes is an important issue for all incident-reporting schemes. The individuals who witness an incident may only be able to provide information about the immediate aftermath of an adverse event. However, human ‘error’ and system ‘failure’ can have far more prolonged consequences. This is acknowledged in the Lack scale of prognoses used in the CIRS system. Transient abnormalities are clearly distinguished from permanently disabling incidents. The other schemes do not encourage their respondents to consider these longer-term effects so explicitly. In part this can be explained by the domain specific nature of consequence assessments. The flight engineer may only be able to assess the impact of an incident to the next flight. Even if this is the case, it is often necessary for those administering the schemes to provide information about long-term effects to those contributing reports. This forms part of the feedback process that warns people about the potential long-term consequences of future incidents.
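Because the five CIRS outcome categories are ordered by severity, they lend themselves to an ordered enumeration. The Python sketch below assumes numeric ranks of 1 to 5, which are an illustrative choice and are not specified by the form. The names `Outcome` and `is_transient` are likewise hypothetical.

```python
from enum import IntEnum


class Outcome(IntEnum):
    """Outcome categories quoted from the CIRS form; numeric ranks are assumed."""
    TRANSIENT_UNAWARE = 1                   # transient abnormality, patient unaware
    TRANSIENT_FULL_RECOVERY = 2             # transient abnormality, full recovery
    POTENTIAL_PERMANENT_NOT_DISABLING = 3   # potential permanent, not disabling
    POTENTIAL_PERMANENT_DISABLING = 4       # potential permanent disabling damage
    DEATH = 5


def is_transient(outcome: Outcome) -> bool:
    """True for the two categories that describe a transient abnormality."""
    return outcome <= Outcome.TRANSIENT_FULL_RECOVERY


# Invented outcomes for three reports.
reports = [Outcome.TRANSIENT_FULL_RECOVERY, Outcome.DEATH, Outcome.TRANSIENT_UNAWARE]

worst = max(reports)                                     # most severe outcome
long_term = [o for o in reports if not is_transient(o)]  # non-transient outcomes
```

A free-text field, as used in the local scheme, supports neither the comparison nor the filter without a later coding step by an analyst.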

4.4 Mitigating factors

Several authors argue that more attention needs to be paid to the factors that reduce or avoid the negative consequences of an incident (van der Schaaf 1996, Reason 1998). These factors are not explicitly considered by most reporting systems. There is an understandable focus on avoiding the precursors to an incident rather than mitigating its potential consequences. For instance, the ASRS forms simply ask respondents to consider "Corrective Actions" as a footnote to the free text area of the form shown in Figure 2. Similarly, the local form shown in Figure 1 asks respondents to describe "what factors minimised the incident".

The CIRS again takes a different approach to the other forms. Rather than asking the user to describe mitigating factors in the form of free-text descriptions, this system provides a number of explicit prompts. It asks the respondent to indicate whether personal factors such as "appropriate knowledge, skill, experience or situational awareness" were recovery factors. The form also asks for information about ways in which equipment provision and team co-ordination helped to mitigate the consequences of the failure. Questions are also asked about the role of management and the working environment in recovery actions. Such detailed questions can dissuade people from investing the amount of time that is necessary to complete the 20 fields that are devoted to mitigating factors alone. Of course, the trade-off is that the other schemes may fail to elicit critical information about the ways in which managerial and team factors helped to mitigate the consequences of an incident.

4.5 Prevention

Individuals who directly witness an incident can provide valuable information about how future adverse events might be avoided. However, such individuals may have biased views that are influenced by remorse, guilt or culpability. Subjective recommendations can also be biased by the individual’s interpretation of the performance of their colleagues, their management or of particular technical subsystems. Even if these factors did not obscure their judgement, they may simply have been unaware of critical information about the causes of an incident. In spite of these caveats, many incident reporting forms do ask individuals to comment on ways in which an adverse event might have been avoided. The local system in Figure 1 asks respondents to suggest "how might such incidents be avoided". This open question is, in part, a consequence of the definition of an incident in this scheme which included occurrences "that might have led (if not discovered in time) or did lead, to an undesirable outcome". This definition coupled with the request for prevention information shows that the local system plays a dual role both in improving safety "culture" and in supporting more general process improvement. This dual focus is mirrored in the CIRS form: "What would you suggest for prevention of this incident? (check all appropriate fields): additional monitoring/equipment; improved monitoring/equipment; better maintenance of existing monitoring/equipment; improved arrangement of drugs; improved arrangement of monitoring/ equipment; better training/ education; better working conditions; better organisation; better supervision; more personnel; better communication; more discipline with existing checklists; better quality assurance; development of algorithms / guidelines; abandonment of old 'routine'". This contrasts with the local system in which "complications which occur despite normal management are not critical incidents but if in doubt fill in a form". Under the CIRS definition, failures in normal management would be included and so must be addressed by proposed changes.

The ASRS does not ask respondents to speculate on how an incident might have been avoided. There are several reasons for this. Some of them stem from the issues of subjectivity and bias, mentioned above. Others relate to the subsequent analytical stages that form part of many incident-reporting systems. An important difference between the ASRS and the other two schemes considered in this paper is that it is a confidential and not an anonymous system. This means that it is possible for ASRS personnel to contact individuals who supply a report to validate their account and to ask supplementary questions about prevention factors. CIRS does not provide direct input into regulatory actions. Instead, it aims to increase awareness about clinical incidents through the provision of a web based information source. It, therefore, protects the anonymity of respondents and only has a single opportunity to enquire about preventative measures. In the local system, the personnel who administer the system are very familiar with the context in which an incident occurs and so can directly assess proposed changes to working practices.

5. Further Work and Conclusions

There has been a rapid growth in the use of incident reporting schemes as a primary means of preventing future accidents. However, the utility of these systems depends upon the forms that are used to elicit information about potential failures. This paper, therefore, uses a comparative study of existing approaches to identify key decisions that must be made during the design of future documents. Much work remains to be done. At one level, the various approaches of the ASRS, CIRS and the local system have been validated by their success in attracting submissions. At another level, there is an urgent need for further work to be conducted into the validation of specific approaches. For instance, it is unclear whether techniques from the CIRS system might improve the effectiveness of the local system or vice versa. This work creates considerable ethical and methodological problems. Laboratory experiments cannot easily recreate the circumstances that lead to incident reports. Conversely, observation analysts may have to wait for very long periods before they can witness an incident within a real working environment. The lack of research in this area has led to a huge diversity of reporting forms across national boundaries and within different industries. We urgently need more information about the effects that different approaches to form design have upon the nature and number of incidents that are reported to these systems.

Acknowledgements

Thanks are due to the members of the Glasgow Interactive Systems Group and the Glasgow Accident Analysis Group. This work is supported, in part, by a grant from the UK Engineering and Physical Sciences Research Council.

REFERENCES

D. Busse and C.W. Johnson, Human Error in an Intensive Care Unit: A Cognitive Analysis of Critical Incidents. In J. Dixon (editor) 17th International Systems Safety Conference, Systems Safety Society, Unionville, Virginia, USA, 138-147, 1999.

D.K. Busse and D.J. Wright, Classification and Analysis of Incidents in Complex, Medical Environments. To appear in Topics in Healthcare Information Management, 20, 2000.

A. Hale, B. Wilpert and M. Freitag, After the Event: From Accident to Organisational Learning. Pergamon, New York, 1997.

L.A. Lack, Preoperative anaesthetic audit. Baillière's Clinical Anaesthesiology, 4:171-84, 1990.

J. Reason, Managing the Risks of Organisational Accidents, Ashgate, Aldershot, 1998.

S. Staender, M. Kaufman and D. Scheidegger, Critical Incident Reporting in Anaesthesiology in Switzerland Using Standard Internet Technology. In C.W. Johnson (editor), 1st Workshop on Human Error and Clinical Systems, Glasgow Accident Analysis Group, University of Glasgow, 1999.

T. van der Schaaf, PRISMA: A Risk Management Tool Based on Incident Analysis. International Workshop on Process Safety Management and Inherently Safer Processes, October 8-11, Orlando, Florida, 242-251, 1996.

T. van der Schaaf, Human recovery of Errors in Man-Machine Systems. In CCPS’96: Process Safety Management and Inherently Safer Processes, American Institute of Chemical Engineers, Florida, USA, 1996a.

W. van Vuuren, Organisational Failure: An Exploratory Study in the Steel Industry and the Medical Domain, PhD thesis, Technical University of Eindhoven, Netherlands, 1998.