DIP5 Example Question

Question 1

(a) Suppose we have an image type with range 0..255, and we are given a one-dimensional array H of real numbers with 256 entries. H[i] contains the number of occurrences of pixel value i in the last 1000 images of this type captured by some imaging equipment. Give an algorithm that will construct an optimal scalar quantiser for these images such that the quantised pixels can be stored in 4 bits.

[15]

Question 2

(a) Recovery of depth information from images can be achieved using two images captured simultaneously from two cameras:

i. Explain why it is not possible to recover depth from a single image by inverting the perspective projection equation. Where (xp,yp) and (Xp,Yp,Zp) are the image plane and world coordinates respectively and f is the distance from the perspective centre to the image plane:

[2]

ii. Using diagrams show the difference between parallel and converged imaging geometry, outline how you would recover depth from images captured using either of these configurations and explain the relative advantages of these stereo imaging configurations.

[6]

(b) Solving the stereo correspondence problem is key to recovering depth from stereo pair images:

i. Write the high-level pseudo code to implement a stereo matching algorithm based on statistical correlation and refinement through a multi-resolution scale-space.

[7]

Question 3

(a) The human retina contains two types of photoreceptor, the rod and the cone, set within a space-variant tessellation:

i. What is the spatial arrangement of photoreceptors in the retina? How do the relative densities of rods and cones vary over the retina and what implications does this arrangement have for night vision and colour perception?

[4]

ii. Ganglion cells encode the signals from the eye prior to entering the optic nerve. Explain using a diagram what mathematical function can be used to model the Ganglion cells which encode signals originating in the fovea. What two receptive field configurations do these cells exhibit and how can the above mathematical function model these configurations? What function would this cell appear to be performing from an image processing perspective?

[5]

(b) In humans and mammals, a geometric transformation appears to occur in the visual pathway between the retina and the Primary Visual Cortex that can be modelled approximately using the Complex Log Transform: $w = \log z$, where w and z are complex variables representing points on the cortex (u,v) and retina (x,y) respectively.

i. Using a diagram explain how the above transformation maps circles and radial lines centred in the retina plane to orthogonal straight lines in the cortex.

[3]

ii. Using a diagram explain how this transformation affords a degree of invariance to scale, rotation and ground-plane projection on the retina plane.

[3]

Answer 1

(a) Suppose we have an image type with range 0..255, and we are given a one-dimensional array H of real numbers with 256 entries. H[i] contains the number of occurrences of pixel value i in the last 1000 images of this type captured by some imaging equipment. Give an algorithm that will construct an optimal scalar quantiser for these images such that the quantised pixels can be stored in 4 bits.

The algorithm works by recursively splitting the histogram on its mean. Each time it splits the histogram it adds one bit to the code number pos, so that all quantisation codes below the new value of pos correspond to pixel values at or below the mean just found. This partitions the histogram into sub-histograms to which the process is recursively applied:

procedure q (pos, fac, bit : range; i, j : integer);
var
    n, m : real;
begin
    n ← Σ h[i..j];                      { number of pixels in the sub-histogram }
    m ← Σ h[k] × k, for k = i..j;       { sum of the pixel values in the sub-histogram }
    m ← m / n;                          { mean pixel value }
    if bit < 0 then t[pos] ← m          { no bits left: store the mean as the value for code pos }
    else
    begin
        q (pos, fac / 2, bit - 1, i, round(m));
        q (pos + fac, fac / 2, bit - 1, round(m) + 1, j);
    end;
end;
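The recursive mean split can be sketched in Python as follows. This is a minimal illustration rather than a definitive implementation: the names `build_quantiser`, `levels` and `lut` are hypothetical, the initial call (pos 0, fac 8, bit 3 for 4-bit codes) is inferred from the procedure's parameters, and the handling of empty sub-histograms and the clamping of the cut point are assumptions added so that the code runs on any histogram.

```python
def build_quantiser(hist, bits=4):
    """Recursively split a histogram on its mean.

    Returns (levels, lut): levels[code] is the value stored for a code,
    lut[v] is the code assigned to pixel value v.
    """
    levels = [0.0] * (1 << bits)
    lut = [0] * len(hist)

    def q(pos, fac, bit, i, j):
        n = sum(hist[i:j + 1])
        if n > 0:
            mean = sum(k * hist[k] for k in range(i, j + 1)) / n
        else:
            # Assumption: an empty sub-histogram falls back to its midpoint.
            mean = (i + j) / 2
        if bit < 0:
            # No bits left: record the mean and the pixel range for this code.
            levels[pos] = mean
            for v in range(i, j + 1):
                lut[v] = pos
            return
        # Assumption: clamp the cut so it never leaves the range i..j.
        cut = min(max(round(mean), i), j)
        q(pos, fac // 2, bit - 1, i, cut)
        q(pos + fac, fac // 2, bit - 1, cut + 1, j)

    q(0, 1 << (bits - 1), bits - 1, 0, len(hist) - 1)
    return levels, lut


# Usage: a flat histogram gives 16 codes in increasing order of intensity.
levels, lut = build_quantiser([1.0] * 256)
```

Because the upper half of every split receives pos + fac, the codes come out ordered by intensity, so `lut` is non-decreasing in the pixel value.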

Answer 2

(a) Recovery of depth information from images can be achieved using two images captured simultaneously from two cameras:

i. Explain why it is not possible to recover depth from a single image by inverting the perspective projection equation. Where (xp,yp) and (Xp,Yp,Zp) are the image plane and world coordinates respectively and f is the distance from the perspective centre to the image plane:

Inverting the perspective projection equation yields only a line in space that runs from the image plane through the perspective centre of the camera, because the third dimension is lost when projecting onto the image plane. Every world point on that line projects to the same image point, so its depth cannot be determined.

[2]
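As a worked illustration, assume the usual pinhole form of the perspective projection (the equation itself is not reproduced in the text above, so this form is an assumption). Inverting it gives two equations in three unknowns, leaving Zp free:

```latex
x_p = \frac{f X_p}{Z_p}, \qquad y_p = \frac{f Y_p}{Z_p}
\quad\Longrightarrow\quad
(X_p, Y_p, Z_p) = Z_p \left( \frac{x_p}{f},\; \frac{y_p}{f},\; 1 \right),
\qquad Z_p \text{ unknown}.
```

Each value of Zp gives a different point on the same ray through the perspective centre.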

ii. Using diagrams show the difference between parallel and converged imaging geometry, outline how you would recover depth from images captured using either of these configurations and explain the relative advantages of these stereo imaging configurations.

[Figure: parallel geometry, showing the two image planes.]

Using either parallel or converged imaging geometry, the basic principle of depth recovery is first to determine the precise geometric configuration of the cameras, usually by calibration. This involves recovering the intrinsic camera parameters (focal length, i.e. principal distance, and principal point) and the extrinsic camera parameters (the baseline translation and relative convergence angles, i.e. a 6 degree-of-freedom rigid-body translation and rotation, recovered by space resection). With this information, a space intersection can be performed once the stereo correspondence between the image planes has been established. Space intersection recovers depth by finding the 3D world intersection of the lines of projection originating from corresponding points in each camera, i.e. depth by triangulation.

[6]
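The space intersection step can be sketched as follows. This is a minimal sketch, not the only formulation: it assumes each line of projection is already expressed in world coordinates as a camera centre plus a direction, and, because measured rays rarely meet exactly, it returns the midpoint of the shortest segment joining them. The function name `triangulate` and its arguments are hypothetical.

```python
def triangulate(c1, d1, c2, d2):
    """Midpoint of the shortest segment joining the rays c1 + s*d1 and c2 + t*d2."""
    def dot(a, b):
        return sum(x * y for x, y in zip(a, b))

    w = [p - q for p, q in zip(c1, c2)]
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w), dot(d2, w)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are parallel; no unique intersection")
    # Least-squares ray parameters of the two closest points.
    s = (b * e - c * d) / denom
    t = (a * e - b * d) / denom
    p1 = [p + s * v for p, v in zip(c1, d1)]
    p2 = [p + t * v for p, v in zip(c2, d2)]
    return [(u + v) / 2 for u, v in zip(p1, p2)]


# Usage: two cameras one unit apart, both looking at the point (0.5, 0, 2).
point = triangulate((0, 0, 0), (0.5, 0, 2), (1, 0, 0), (-0.5, 0, 2))
```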

(b) Solving the stereo correspondence problem is key to recovering depth from stereo pair images:

i. Write the high-level pseudo code to implement a stereo matching algorithm based on statistical correlation and refinement through a multi-resolution scale-space.

1. Construct an image bandpass pyramid of N levels, each separated by a factor of S in scale.

2. Initialise the X and Y disparity maps to zero at the scale of the coarsest pyramid level, N.

3. For each level of the pyramid, starting at the coarsest level, N, do:

a. Warp the test image into alignment with the reference image using the previously computed X,Y disparity maps (no action the first time round).

b. For each pixel in the reference image do:

i. Perform statistical correlation between the patch centred on the pixel in the reference image and the patches centred on the corresponding location in the test image and on its 4 nearest neighbours.

ii. Compute the current disparity offsets Xc,Yc to sub-pixel resolution by fitting orthogonal parabolas (in the x and y axis directions) to the correlation scores returned for the locations in i. and finding the turning points of the parabolas.

iii. Accumulate the current Xc,Yc maps into the X,Y disparity maps.

c. Perform a pyramidal expansion by a factor S of the X and Y disparity maps and multiply (scale) their values by S.

d. Decrement N and restart at a. for the next level of the pyramid, or terminate if N < 0.

[7]
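Steps b.i and b.ii can be illustrated with two small helpers. This is a sketch under stated assumptions: the source does not specify the correlation measure, so zero-mean normalised cross-correlation is assumed here, and the names `ncc` and `subpixel_offset` are hypothetical. Patches are passed as flat lists of pixel values.

```python
import math


def ncc(a, b):
    """Zero-mean normalised cross-correlation of two equal-length patches."""
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    da = [x - mean_a for x in a]
    db = [y - mean_b for y in b]
    denom = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    if denom == 0:
        return 0.0  # a flat patch carries no correlation information
    return sum(x * y for x, y in zip(da, db)) / denom


def subpixel_offset(c_minus, c_zero, c_plus):
    """Turning point of the parabola through scores at offsets -1, 0 and +1."""
    curvature = c_minus - 2 * c_zero + c_plus
    if curvature == 0:
        return 0.0  # scores are collinear, so there is no turning point
    return 0.5 * (c_minus - c_plus) / curvature


# Usage: scores sampled from a parabola that peaks at x = 0.25.
offset = subpixel_offset(-1.5625, -0.0625, -0.5625)
```

Calling `subpixel_offset` once with the left, centre and right scores and once with the upper, centre and lower scores gives the Xc and Yc estimates for a pixel.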

Answer 3

(a) The human retina contains two types of photoreceptor, the rod and the cone, set within a space-variant tessellation:

i. What is the spatial arrangement of photoreceptors in the retina? How do the relative densities of rods and cones vary over the retina and what implications does this arrangement have for night vision and colour perception?

The photoreceptors of the retina are organised in an approximately regular hexagonal tessellation in the centre (fovea) of the retina, and the sampling becomes exponentially less dense towards the periphery. The cone receptors are concentrated in the fovea and their density falls off towards the periphery of the retina; the rods follow a complementary arrangement, with no rods at all in the foveal pit. This implies that fixating objects directly on the fovea is not a good strategy for optimum night vision, and that colour perception falls off with visual angle.

[4]

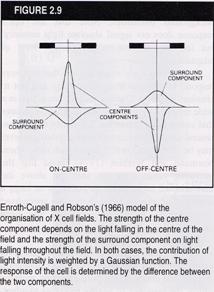

ii. Ganglion cells encode the signals from the eye prior to entering the optic nerve. Explain using a diagram what mathematical function can be used to model the Ganglion cells which encode signals originating in the fovea. What two receptive field configurations do these cells exhibit and how can the above mathematical function model these configurations? What function would this cell appear to be performing from an image processing perspective?

Ganglion cells can be modelled using a Difference of Gaussians function, which models the human P cell found in the fovea. The two Gaussian subfields oppose each other to produce on-centre off-surround or off-centre on-surround receptive field varieties. This cell appears to be computing a spatial bandpass filter.

[5]
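A Difference of Gaussians receptive field can be sketched as a function of distance r from the field centre. The widths used here (1 and 2, in arbitrary units) and the names `gaussian_2d` and `dog` are illustrative assumptions, not values taken from the answer above.

```python
import math


def gaussian_2d(r, sigma):
    """Unit-volume 2D Gaussian evaluated at radius r."""
    return math.exp(-r * r / (2 * sigma * sigma)) / (2 * math.pi * sigma * sigma)


def dog(r, sigma_centre=1.0, sigma_surround=2.0, on_centre=True):
    """Narrow centre Gaussian minus wide surround Gaussian.

    on_centre=False flips the sign, giving the off-centre on-surround field.
    """
    value = gaussian_2d(r, sigma_centre) - gaussian_2d(r, sigma_surround)
    return value if on_centre else -value


# Usage: excitatory at the centre, inhibitory in the surround.
centre_response = dog(0.0)
surround_response = dog(3.0)
```

Because both Gaussians have unit volume, the field sums to zero over the plane, so a uniform image produces no response. That is consistent with the bandpass behaviour described above.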

(b) In humans and mammals, a geometric transformation appears to occur in the visual pathway between the retina and the Primary Visual Cortex that can be modelled approximately using the Complex Log Transform: $w = \log z$, where w and z are complex variables representing points on the cortex (u,v) and retina (x,y) respectively.

i. Using a diagram explain how the above transformation maps circles and radial lines centred in the retina plane to orthogonal straight lines in the cortex.

Circles centred on the retina plane have constant u = log|z| and linearly increasing angle v = arg z, and therefore map to vertical lines in the cortex. Radial lines centred on the retina have constant angle v = arg z and monotonically increasing u = log|z|, and therefore map to horizontal lines in the cortex. Figure to show.

[3]
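The mapping can be checked numerically with Python's `cmath.log`, which returns log|z| + i arg z. The helper name `to_cortex` and the sample radius and angle are arbitrary choices for illustration.

```python
import cmath
import math


def to_cortex(x, y):
    """Map a retinal point (x, y) to cortical coordinates (u, v) via w = log z."""
    w = cmath.log(complex(x, y))
    return w.real, w.imag


# Points on a circle of radius 2 centred on the retina: u stays fixed, v varies.
circle = [to_cortex(2 * math.cos(t), 2 * math.sin(t)) for t in (-2.0, -1.0, 0.0, 1.0, 2.0)]

# Points on a radial line at angle 0.5 rad: v stays fixed, u varies.
ray = [to_cortex(r * math.cos(0.5), r * math.sin(0.5)) for r in (0.5, 1.0, 2.0, 4.0)]
```

Every point in `circle` has u = log 2, giving a vertical line in the (u,v) plane, and every point in `ray` has v = 0.5, giving a horizontal line.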

ii. Using a diagram explain how this transformation affords a degree of invariance to scale, rotation and ground-plane projection on the retina plane.

Rotating or scaling any contour centred on the retina shifts its image along orthogonal axes in the cortex: a rotation shifts it along the angle axis and a change of scale shifts it along the log-radius axis, leaving its shape unchanged. If the vanishing points of a ground plane converge at the centre of the retina, then tiles of equal area on the ground plane will map to approximately equal areas in the cortex.

[3]
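The shift property for scale and rotation can be verified with a short numerical check. The sample point, scale factor and rotation angle are arbitrary, and the angle is kept small so that the result stays clear of the branch cut of the logarithm.

```python
import cmath
import math

z = complex(1.0, 0.5)   # a retinal point
k, theta = 3.0, 0.4     # scale factor and rotation angle in radians

w_before = cmath.log(z)
w_after = cmath.log(k * cmath.exp(1j * theta) * z)

# Scaling by k and rotating by theta moves the cortical point by (log k, theta).
shift = w_after - w_before
```

The shift is the same for every retinal point, so a scaled or rotated contour keeps its cortical shape and only changes position.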